AI Reads Your Mind; World Model Launches; NYT vs Your Chats

AI Just Learned To Caption Thoughts

Researchers at NTT Communication Science Laboratories unveiled the first system that turns human brain activity into full-sentence visual descriptions. Using fMRI data and a masked-language-model pipeline, the system reconstructs what a person is watching by iteratively refining semantic guesses until they match the underlying neural patterns. Unlike previous one-word decoding attempts, the model generates detailed statements like “a person jumps over a deep waterfall on a mountain ridge.”

The breakthrough shows AI can bridge raw neural signals with structured language without relying on massive human-caption datasets, marking a major evolution in noninvasive brain interpretation.

Why It Matters:

This takes mind decoding from “neat parlor trick” to “proto communication channel,” raising big implications for healthcare, accessibility and neurointerfaces. It also signals a future where AI fills in semantic gaps from partial or noisy signals, not just text.

The Deets:

- Combines fMRI scans with a masked language model that iteratively refines candidate descriptions

- Generates full-sentence descriptions, not single-word guesses

- Entirely noninvasive, unlike electrode-driven approaches

- Demonstrates semantic inference rather than direct pattern matching

- First system to create structured visual language from raw neural activity
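To make the "iteratively refining semantic guesses" idea concrete, here is a minimal toy sketch, not the researchers' code. It assumes a stand-in feature vector plays the role of features decoded from brain activity, and that a masked model proposes a handful of candidate words per slot. The vocabulary, the `features` function and the `decoded` vector are all hypothetical.

```python
import math

# Hypothetical per-slot candidates, standing in for words a masked model might propose.
CANDIDATES = [
    ["dog", "person"],
    ["sleeps", "jumps"],
    ["sofa", "waterfall"],
]
VOCAB = [w for slot in CANDIDATES for w in slot]

def features(words):
    """Toy semantic features: a bag-of-words count vector over VOCAB."""
    return [float(words.count(w)) for w in VOCAB]

def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def refine(start, target_features, max_rounds=10):
    """Greedy refinement: mask one slot at a time, keep any replacement that scores higher."""
    current = list(start)
    best = cosine(features(current), target_features)
    for _ in range(max_rounds):
        improved = False
        for slot, options in enumerate(CANDIDATES):
            for word in options:
                trial = current[:slot] + [word] + current[slot + 1:]
                score = cosine(features(trial), target_features)
                if score > best:
                    current, best, improved = trial, score, True
        if not improved:
            break  # no single-word swap helps any more
    return current, best

# Assumed stand-in for features decoded from fMRI (not real data).
decoded = features(["person", "jumps", "waterfall"])
sentence, score = refine(["dog", "sleeps", "sofa"], decoded)
print(" ".join(sentence), round(score, 3))
```

The real system works with far richer language-model features and noisy neural data; the sketch only shows the loop shape: guess, mask, swap, score, repeat.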

Key Takeaway:

Neuralink is teaching the brain to speak; this system is teaching it to narrate.

🧩 Jargon Buster: Masked Language Modeling - A training method where parts of a sentence are hidden and the model predicts the missing words, helping it understand context and semantics.
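For the curious, a minimal sketch of the fill-in-the-blank idea, assuming a tiny made-up corpus and simple neighbor counts instead of a neural network. The corpus and function names are illustrative, not from the NTT study.

```python
from collections import Counter, defaultdict

# Tiny illustrative corpus (hypothetical).
CORPUS = [
    "a person jumps over a waterfall",
    "a person jumps over a fence",
    "a dog jumps over a fence",
    "a person walks on a ridge",
]

def build_context_counts(sentences):
    """Count which word appears between each (left, right) neighbor pair."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for i in range(1, len(tokens) - 1):
            counts[(tokens[i - 1], tokens[i + 1])][tokens[i]] += 1
    return counts

def fill_mask(tokens, counts, mask="[MASK]"):
    """Predict the single masked token from its immediate neighbors, or None if unseen."""
    padded = ["<s>"] + tokens + ["</s>"]
    i = padded.index(mask)
    candidates = counts.get((padded[i - 1], padded[i + 1]))
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

counts = build_context_counts(CORPUS)
prediction = fill_mask("a person [MASK] over a fence".split(), counts)
print(prediction)
```

Real masked language models learn from much wider context than two neighboring words, but the training signal is the same: hide a word, then guess it.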

More: AI Secret

🌎 World Models

Fei-Fei Li’s World Labs Launches Marble

AI “godmother” Fei-Fei Li launched Marble, World Labs’ first commercial world model capable of generating editable 3D environments from text, images, or video. Unlike image or video tools, Marble produces persistent scenes that can be modified, expanded, exported, or used in real creative workflows. The platform ships with freemium tiers, paid options and export formats for Gaussian splats, meshes and cinematic outputs.

Marble pairs with Li’s recent spatial-intelligence manifesto, which argues that AI must understand 3D physics to progress beyond language-level reasoning.

Why It Matters:

This is the moment world models jump from research hype to real creative tools, opening paths in robotics, architecture, VFX, simulation and gameplay design.

The Deets:

- Generates or edits 3D worlds from text, images, or layouts

- Exports to meshes, splats, or video for downstream workflows

- Ships with freemium plus paid tiers starting at $20 per month

- Built atop spatial-intelligence research Li has been championing

- Positions World Labs ahead of Google’s Genie and Decart

Key Takeaway:

World models are no longer demos; they are now design tools.

🧩 Jargon Buster: Gaussian Splatting - A graphics technique that renders 3D scenes using fuzzy “splat” primitives instead of polygons, allowing fast, photorealistic reconstruction.
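A rough feel for how splats become pixels, as a simplified 2D sketch: it assumes round (isotropic) grayscale splats already projected onto the screen, whereas real Gaussian splatting projects stretched 3D Gaussians and runs on the GPU. The splat fields (`cx`, `cy`, `sigma`, `gray`, `opacity`, `depth`) are hypothetical names chosen for illustration.

```python
import math

def splat_weight(px, py, cx, cy, sigma):
    """Gaussian falloff of one splat at pixel (px, py)."""
    d2 = (px - cx) ** 2 + (py - cy) ** 2
    return math.exp(-d2 / (2.0 * sigma ** 2))

def render(splats, width, height):
    """Composite depth-sorted splats front to back into a grayscale image."""
    ordered = sorted(splats, key=lambda s: s["depth"])  # nearest splat first
    image = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            color, transmittance = 0.0, 1.0
            for s in ordered:
                alpha = s["opacity"] * splat_weight(x, y, s["cx"], s["cy"], s["sigma"])
                color += transmittance * alpha * s["gray"]
                transmittance *= 1.0 - alpha  # light left over for splats behind
            image[y][x] = color
    return image

splats = [
    {"cx": 2, "cy": 2, "sigma": 1.0, "gray": 0.8, "opacity": 1.0, "depth": 1.0},
    {"cx": 2, "cy": 2, "sigma": 1.0, "gray": 0.2, "opacity": 1.0, "depth": 2.0},
]
image = render(splats, width=5, height=5)
print(round(image[2][2], 3), round(image[0][0], 3))
```

At the center pixel the front splat is fully opaque, so the one behind it contributes nothing; toward the edges both fade out smoothly, which is where the "fuzzy" look comes from.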

More: The Rundown AI

🏛️ Labor & Regulation

DeepSeek Publishes A Challenge: Confess Which Jobs AI Will Kill

At China’s Wuzhen World Internet Conference, DeepSeek broke months of silence by challenging all AI labs to openly document which human jobs their models will displace first. The company framed it as an ethical duty, but the move also strategically pressures frontier labs that carry higher costs, slower release cycles, and bigger regulatory targets.

It also positions DeepSeek as the low-cost insurgent pushing for radical transparency while rivals navigate political scrutiny and billion-dollar burn rates.

Why It Matters:

DeepSeek’s call shifts the labor debate from “should AI take jobs?” to “admit which ones you are targeting.” That forces accountability conversations many labs would prefer to avoid.

The Deets:

- Challenge delivered at the Wuzhen World Internet Conference

- Pushes labs to publish job-loss predictions

- Leverages DeepSeek’s lower-cost advantage

- Strategically raises regulatory expectations for rivals

- Reignites global debate about AI-driven unemployment

Key Takeaway:

It is less a moral plea and more a pressure tactic to raise the bar for competitors.

🧩 Jargon Buster: Transparency Norms - Unwritten industry expectations that companies openly disclose risks, impacts, and model behavior.

More: AI Secret

🔒 Legal & Privacy

OpenAI Battles Court Order To Hand Over 20M ChatGPT Logs

OpenAI is appealing a ruling requiring it to turn over 20 million anonymized ChatGPT conversations to the New York Times for discovery in a copyright lawsuit. The NYT originally requested 1.4 billion logs but narrowed the request to a representative sample.

OpenAI argues that even anonymized conversations constitute an invasion of user privacy and that almost all requested logs have nothing to do with the NYT’s claims.

Why It Matters:

This case could set a precedent for how user data, training data and chat logs are treated in lawsuits involving generative models. It also tests consumer expectations of AI privacy.

The Deets:

- Judge ruled the logs are appropriate discovery material

- NYT narrowed the ask from 1.4B to 20M chats

- OpenAI claims 99.99 percent of the logs are irrelevant to the case

- Filed an appeal framing it as a privacy threat

- Could shape future AI confidentiality standards
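A quick back-of-the-envelope check on the numbers above, assuming OpenAI's 99.99 percent figure applies to the 20M-chat sample (the source does not say which pool it refers to). Variable names are illustrative.

```python
from fractions import Fraction

requested_original = 1_400_000_000   # logs the NYT first asked for
requested_narrowed = 20_000_000      # logs in the narrowed request
irrelevant_share = Fraction(9999, 10000)  # OpenAI's claimed irrelevant share

# Share of the original ask that the narrowed request represents.
sample_fraction = Fraction(requested_narrowed, requested_original)

# Chats that would remain relevant if the claim held for the sample (assumption).
potentially_relevant = requested_narrowed * (1 - irrelevant_share)

print(f"{float(sample_fraction):.2%} of the original request")
print(f"{int(potentially_relevant):,} potentially relevant chats")
```

Under that assumption, the narrowed request is roughly 1.4 percent of the original ask, and OpenAI's own claim would still leave about 2,000 chats in play.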

Key Takeaway:

This fight is not just about copyright; it is about who owns conversational history in the AI age.

🧩 Jargon Buster: Discovery - The legal process where each party in a lawsuit can request evidence from the other side.

More: The Rundown AI

🧠 Frontier Robotics

XCath Performs First Brain Aneurysm Robotic Surgery

Houston-based XCath completed the world’s first robot-assisted treatment of brain aneurysms using its EVR endovascular robot.

Surgeons in Panama remotely guided ultra-fine catheters through cerebral vessels with sub-millimeter accuracy, placing stents and devices to repair multiple aneurysms.

It is only the second intracranial robotic surgery ever performed and the first to treat the condition rather than demonstrate feasibility.

Why It Matters:

This opens the door to remote neurovascular care, where geography no longer limits access to life-saving procedures.

The Deets:

- First robot to perform intracranial aneurysm repair

- Triaxial catheter system guided with sub-millimeter precision

- Supports both local and remote operation

- Demonstrated multi-aneurysm treatment in one session

- Sets new benchmark for neurovascular robotics

Key Takeaway:

Steady hands are no longer a human monopoly; they are becoming a software feature.

🧩 Jargon Buster: Endovascular Robotics - Robotic systems that navigate blood vessels to treat conditions from inside the vascular network.

More: Robotics Herald

💬 Models & Products

OpenAI’s GPT-5.1 Launch Automates Personality Prefs

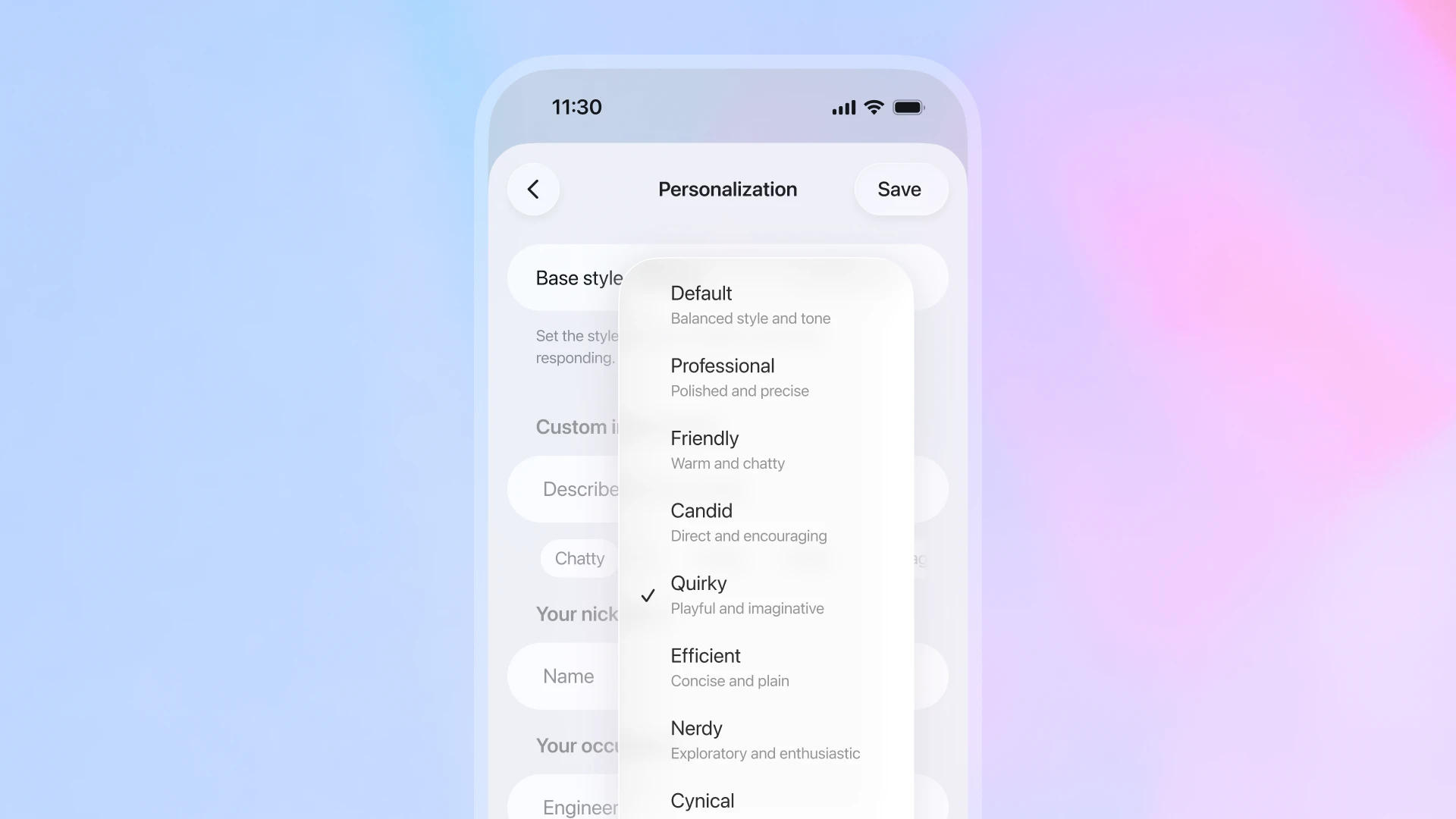

OpenAI launched GPT-5.1 with two modes (Instant and Thinking) and eight personality presets, offering quick sliders for tone and warmth.

Users immediately pointed out that power users have customized style through prompting for years, making the feature feel like UI frosting rather than a real capability upgrade.

The release shipped with little benchmarking, fueling speculation that OpenAI rushed the rollout ahead of a competitive release cycle.

Why It Matters:

It hints at a strategic shift toward incremental updates rather than dramatic model leaps, while also highlighting user expectations that personalization should come from deeper reasoning, not surface-level vibes.

The Deets:

- Eight presets including Professional, Friendly, Quirky, and Cynical

- Two model modes: 5.1 Instant and 5.1 Thinking

- Experimental settings for emoji use, warmth, and scannability

- Sparse benchmarks compared to prior OpenAI releases

- Arrives amid rising competition from Gemini and ERNIE

Key Takeaway:

OpenAI added moods when users were expecting minds.

🧩 Jargon Buster: Tone Presets - Predefined instructions that stylize the AI’s writing without changing underlying reasoning.

More: AI Secret

In 2012, Google released the Knowledge Graph beyond internal search teams, quietly shifting the web from keyword lookup to entity-level understanding. That same shift now powers LLM grounding, agent workflows, and the structured memory systems AI relies on today.

Today’s Sources: AI Secret, The Rundown AI, Robotics Herald