AI Safety Call; 'Backstop-Gate' Continues; 75% Of Companies See Positive AI ROI

OpenAI Calls For Superintelligence Safety

OpenAI outlined a path to prepare for AI systems that could make small scientific discoveries as early as 2026 and more significant breakthroughs by 2028. The company argues that current models already rival top humans on some of the most demanding intellectual tasks, and that rapidly falling “intelligence costs” could unlock fast progress.

OpenAI is pushing for global coordination across governments, standards bodies and labs to reduce risks from misuse, runaway capabilities and concentrated power. It also wants a resilience ecosystem, similar to cybersecurity, to monitor and respond to real-world impacts as they emerge.

Why It Matters:

If discovery-grade systems appear on the proposed timeline, the bottleneck shifts from raw capability to governance, safety standards and societal readiness. Coordinating rules across competing labs and jurisdictions will determine whether benefits scale widely or remain concentrated.

The Deets:

- Timeline: small scientific discoveries expected by 2026, more significant breakthroughs by 2028.

- Claims: systems are roughly “80% of the way to an AI researcher.”

- Priorities: shared safety standards, government partnerships, real-world impact tracking.

- Framing: build a resilience ecosystem, not one-time safeguards.

Key Takeaway:

OpenAI is redefining “readiness” as institutions that evolve alongside capabilities, rather than static guardrails.

🧩 Jargon Buster: Superintelligence - an AI system that far surpasses the best humans across nearly all cognitive tasks, including scientific discovery.

More: The Rundown AI

⚙️ Power Plays

Markets Flinch At “Backstop” Talk, Credibility Becomes Scarce

Sam Altman (@sama) on November 6, 2025:

“I would like to clarify a few things.

First, the obvious one: we do not have or want government guarantees for OpenAI datacenters. We believe that governments should not pick winners or losers, and that taxpayers should not bail out companies that make bad business decisions or…”

After OpenAI’s CFO floated the idea of a financing “ecosystem” that some heard as a government backstop, tech markets erased hundreds of billions in value. Nvidia and Microsoft slid, and OpenAI leadership moved quickly to clarify that it neither needs nor wants guarantees. The episode exposed investor sensitivity to the industry’s debt, energy needs, and multi-year compute commitments.

Why It Matters:

Capital scarcity can slow model training and data center buildouts. Confidence, not just compute, underwrites this cycle. One phrase can raise the cost of capital for the entire sector.

The Deets:

- Immediate selloff tied to perceived bailout optics.

- Altman's response: opposed guarantees, emphasized revenue trajectory.

- Context: trillion-scale capex for compute and power creates macro exposure.

- Lesson: communication risk is now balance-sheet risk.

Key Takeaway:

AI’s tight resource loop means narrative control is financial control.

🧩 Jargon Buster: Backstop - a guarantee or credit facility that caps private lenders' losses, often backed by public support.

More: AI Secret

🧩 Products & How-To

Get The Most Out Of ChatGPT’s Deep Research

Deep Research turns multi-step web analysis into a single automated workflow: it collects sources, synthesizes findings, and produces a structured, cited report you can export or attach to a custom GPT for ongoing monitoring.

Why It Matters:

It turns ad-hoc digging into an auditable research pipeline, useful for market scans, competitor tracking or policy monitoring.

The Deets:

- Activate Deep Research in a new chat, then describe your goals and success criteria.

- The agent browses in multiple stages, ranks sources, and compiles evidence.

- Track progress live, interrupt, or inject new context mid-run.

- Export to PDF or share a link, then reuse the report as a living brief inside a custom GPT.

🧩 Jargon Buster: Agentic Workflow - a model-driven process that plans steps, calls tools, and updates goals based on intermediate results.
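To make that definition concrete, here is a toy agent loop in Python that plans sub-queries, calls a tool, and revises its plan when a step comes back empty. It is a minimal sketch under stated assumptions, not how Deep Research is built: the `search` tool, its tiny in-memory corpus, and the `plan` and `run_agent` helpers are all hypothetical stand-ins for real model and browsing calls.

```python
from dataclasses import dataclass, field
from typing import Callable


# Hypothetical tool: a stand-in for a real web-search/browse call.
def search(query: str) -> list[str]:
    corpus = {
        "ai roi": ["doc-1", "doc-2"],
        "agents": ["doc-2"],
    }
    return corpus.get(query, [])


# Registry of tools the agent may call (only one in this sketch).
TOOLS: dict[str, Callable[[str], list[str]]] = {"search": search}


@dataclass
class AgentState:
    goal: str
    queue: list[str]                                   # pending steps (the plan)
    evidence: list[str] = field(default_factory=list)  # deduplicated sources
    trace: list[str] = field(default_factory=list)     # queries actually run


def plan(goal: str) -> list[str]:
    # Hypothetical planner: a real agent would ask a model to decompose the goal.
    return [part.strip() for part in goal.split(",") if part.strip()]


def run_agent(goal: str, max_steps: int = 10) -> AgentState:
    state = AgentState(goal=goal, queue=plan(goal))
    while state.queue and len(state.trace) < max_steps:
        query = state.queue.pop(0)
        state.trace.append(query)
        results = TOOLS["search"](query)   # tool call
        for source in results:             # compile evidence, skipping duplicates
            if source not in state.evidence:
                state.evidence.append(source)
        # Update the plan from intermediate results: if a multi-word query
        # finds nothing, queue a broader retry using only its last word.
        if not results and " " in query:
            state.queue.append(query.split()[-1])
    return state


state = run_agent("ai roi, agents, enterprise agents")
print(state.trace)     # ['ai roi', 'agents', 'enterprise agents', 'agents']
print(state.evidence)  # ['doc-1', 'doc-2']
```

The empty result for "enterprise agents" triggers a broader retry on "agents", which is the "updates goals based on intermediate results" step in miniature. The `max_steps` cap is a common guard that keeps a replanning loop from running indefinitely.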

More: The Rundown AI University

📊 Strategy & Org

McKinsey’s 2025 AI Reality Check: Pilots Everywhere, Impact Is Scarce

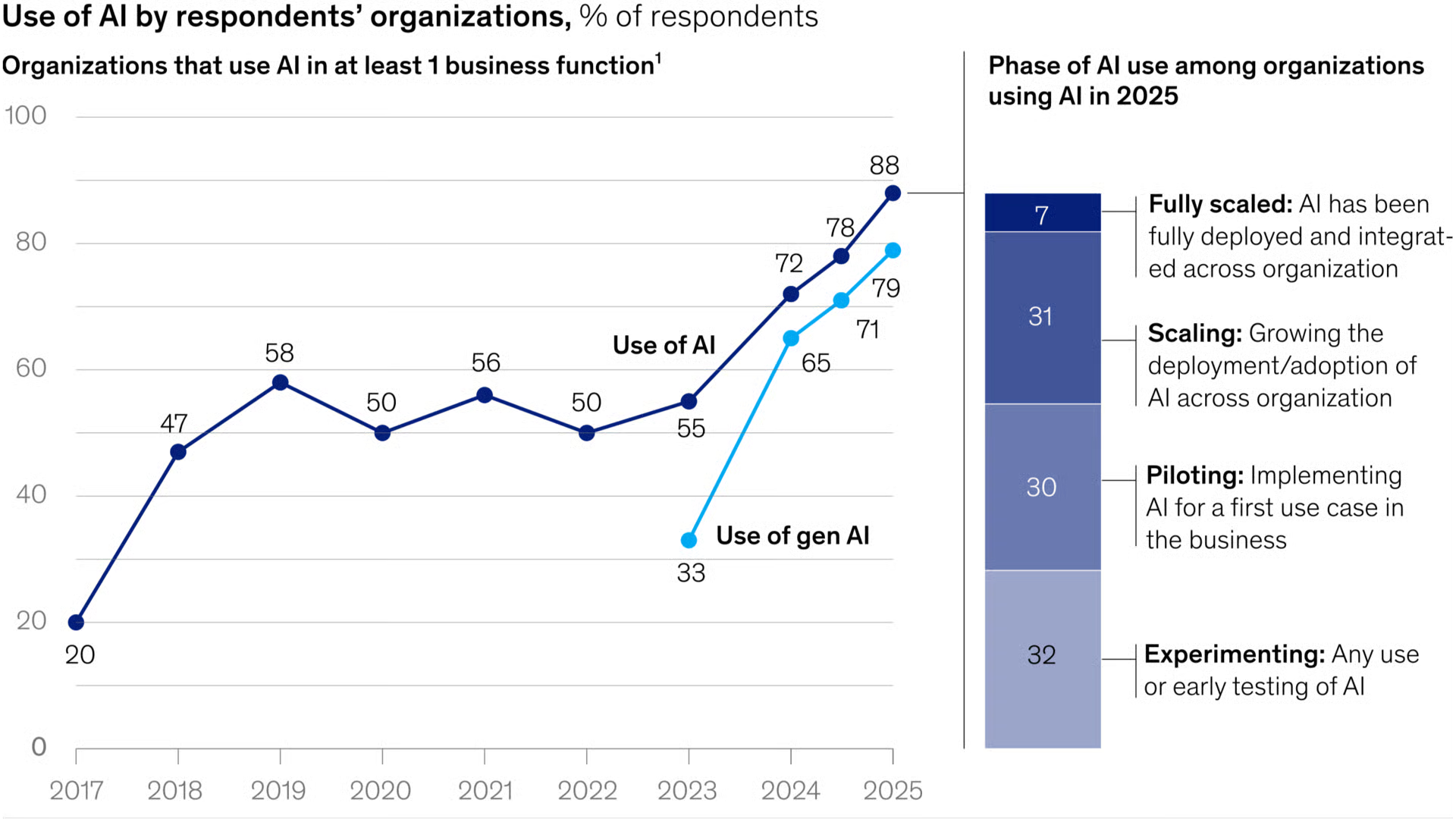

A McKinsey survey of nearly 2,000 organizations finds almost everyone uses AI somewhere, yet only a third scale it, and just a small minority see material EBIT impact. Agent adoption is early, with most activity in IT and knowledge management.

Why It Matters:

Winners treat AI as workflow redesign, not bolt-on automation. Budget increases will follow demonstrable, cross-functional outcomes.

The Deets:

- 88% use AI, 33% are scaling it, 39% report any EBIT impact, and only 6% attribute 5% or more of EBIT to AI.

- 62% are exploring agents and 23% are scaling them, mostly in IT and knowledge management (KM).

- Workforce: 32% expect net reductions of 3% or more next year.

- Pattern: high performers integrate across teams and products.

Key Takeaway:

Impact correlates with operating-model change, not tool count.

🧩 Jargon Buster: EBIT Impact - effect of AI on earnings before interest and taxes, a common profitability metric.

More: The Rundown AI

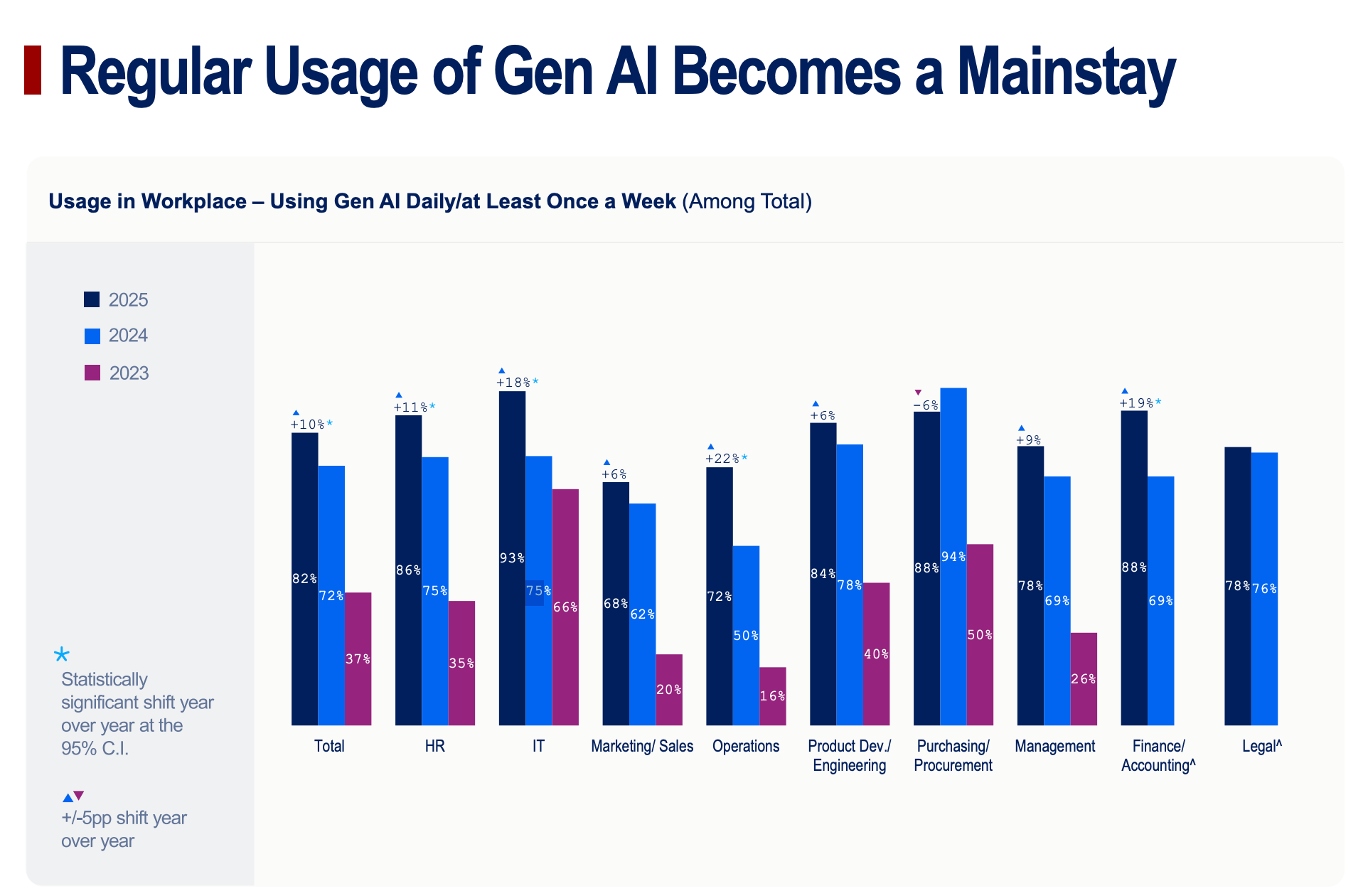

Wharton Study: 75% Of Companies See Positive GenAI ROI, 83% Of Banks

Wharton just released a three-year study of 800 U.S. businesses examining the financial return on generative AI.

Results show 75% of companies report positive ROI, with Tech/Telecom (88%) and Banking/Finance (83%) leading the pack. The study arrives as markets cool on AI hype, but it highlights that real value is emerging from pragmatic, productivity-driven deployments.

Why It Matters:

Despite fears of an “AI bubble,” this data points the other way, suggesting that AI adoption is maturing into measurable financial performance. Key findings from the report:

- Tier-2 and Tier-3 firms, not tech giants, are currently seeing the strongest ROI.

- Simpler, off-the-shelf GenAI tools deliver quicker payoffs than complex custom builds.

- The main friction point isn’t technology but culture and training, with many firms lagging in workforce readiness.

The Deets:

- Everyday AI: GenAI use is mainstream, with daily adoption spanning IT, procurement, and marketing. Leaders emphasize open access, faster rollout, and clearer guardrails.

- Proving Value: Enterprises are shifting from pilots to proven programs. Roughly three in four see positive ROI, with Tech/Telecom (88%) and Banking/Finance (83%) topping returns.

- The Human Capital Lever: C-suite commitment is rising, yet people and processes remain the constraint. Only 61% offer AI training programs, and hiring skilled talent remains difficult.

Key Takeaway:

AI isn’t a bubble if it’s producing tangible returns. The winners are those pairing accessible tools with strong human-capital investment, turning everyday automation into enterprise advantage.

⚡ Quick Hits

OpenAI expands Codex with GPT-5-Codex-Mini and higher rate limits. More...

Vertex AI Agent Builder adds context management, one-command deploys, observability. More...

OpenAI asks the administration to expand CHIPS Act credits to AI data center components. More...

Google launches File Search Tool for managed RAG pipelines in the Gemini API. More...

🧰 Tools Of The Day

- Miro - AI turns rough concepts into roadmaps, diagrams, and decks.

- Delve AI - Digital twins for customer insights with conversational Q&A.

- Teal - Job search assistant that auto-tailors resumes to postings.

- Nyota - AI meeting notes that sync across CRM and project tools.

- Guru - Company knowledge base with verified answers and citations.

- Scite - Research assistant that grounds claims in real citations.

- Attio - AI-native CRM for complex, multi-step workflows.

- Vyrill - Automates creator discovery and video curation for marketing.

- Linkeddit - Surfaces high-intent prospects from Reddit conversations.

- TattooRed - Conversational design assistant for tattoo decisions.

More here: TAAFT

On This Day In AI History: In 2015, Google open-sourced TensorFlow, accelerating the modern AI tooling ecosystem and catalyzing today’s model and agent boom.

Today’s Sources: The Internet, There’s An AI For That, AI Secret, The Rundown AI