AI Standards Grab; Microsoft 'Cancer Maps,' Deep Fake Insurance

Today's AI forecast: 🌤️

AI Giants Move To Write Agents Rulebook

OpenAI, Anthropic, and Block formed the Agentic AI Foundation (AAIF) under the Linux Foundation, and they did not come empty-handed. Each is donating a flagship agent project: Anthropic’s Model Context Protocol (MCP), OpenAI’s AGENTS.md, and Block’s Goose. Within days, Microsoft, Google, AWS, Bloomberg, Cloudflare, and others lined up as supporting members.

On paper, AAIF is a "neutral" standards hub for agentic AI: how models talk to tools, services, and each other. In practice, it is the world’s most powerful AI vendors racing to define a shared playbook for how agents act, transact, and coordinate across the internet before regulators even agree on what an "autonomous agent" legally is. Whoever standardizes the language of agents likely influences the laws about agents later.

Why it matters

If you control the standard, you control the rails. A shared protocol for agents means:

- Developers get easier interoperability and fewer one-off integrations.

- Platforms get network effects as more tools plug into the same ecosystem.

- Regulators will feel pressure to ratify what already works in the wild.

MCP is already supported in ChatGPT, Cursor, Gemini, and VS Code, with more than 10,000 public MCP servers, which means AAIF is not starting from zero. The risk is that "open" standards quietly centralize power around a handful of companies that effectively decide how agents should behave.

The Deets

- Structure: AAIF is a Linux Foundation project, marketed as neutral governance and modeled after infrastructure efforts like PyTorch and Kubernetes.

- Core contributions:

  - MCP: A protocol that lets models talk to tools, databases, and services through a standard interface.

  - AGENTS.md: OpenAI’s open format for a file that tells coding agents how to work in a given repository, such as which setup and test commands to run and which conventions to follow.

  - Goose: Block’s framework for building modular, programmable agents.

- Backers: Early support from Google, Microsoft, AWS, Bloomberg, and Cloudflare signals that this could become the de facto agent interoperability layer.
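Under the hood, MCP messages are JSON-RPC 2.0 requests: a client asks a server which tools it offers with `tools/list` and invokes one with `tools/call`, passing the tool's name and arguments. The minimal sketch below only builds those two messages; it skips the transport (stdio or HTTP) and the initialization handshake the specification requires, and the `query_database` tool and its SQL argument are hypothetical.

```python
import json
from typing import Any, Dict, Optional


def make_request(request_id: int, method: str,
                 params: Optional[Dict[str, Any]] = None) -> str:
    """Serialize a JSON-RPC 2.0 request, the envelope MCP messages use."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Step 1: discover which tools the server exposes.
list_tools = make_request(1, "tools/list")

# Step 2: call one of them. "query_database" is a hypothetical tool name.
call_tool = make_request(
    2,
    "tools/call",
    {"name": "query_database", "arguments": {"sql": "SELECT count(*) FROM orders"}},
)

print(list_tools)
print(call_tool)
```

Because every tool sits behind the same two methods, a client that speaks this envelope can work with any compliant server, which is the interoperability argument in a nutshell.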

Key takeaway

This is less "open-source kumbaya" and more a preemptive standards land grab. The smartest way to avoid regulation is to invent the rules first and label them as "open."

🧩 Jargon Buster - Agentic AI: AI systems that do not just answer questions but take actions on your behalf - calling APIs, modifying files, trading, or managing workflows, often with only high-level human instructions.

Source: AI Secret, The Rundown AI

Microsoft Turns $10 Tissue Slides Into Cancer Maps

Microsoft released and open-sourced GigaTIME, an AI model that effectively turns a basic $10 tissue slide into a virtual cancer atlas. Instead of needing expensive immune profiling and days of lab work, researchers can feed in standard pathology slides and get thousands of dollars’ worth of tumor insights in seconds.

The model learned from 40 million cell samples from Providence Health, pairing simple slides with advanced immune system scans. Tested across 14,000 cancer patients, GigaTIME built a virtual library of 300,000 tumor images spanning 24 cancer types, revealing 1,200-plus patterns that link immune activity to cancer stage and survival. This is not a niche lab trick. It is a blueprint for population-scale cancer analysis powered by ordinary clinical data.

Why it matters

Cancer research has long been constrained by costly, slow, and specialized lab assays. GigaTIME pushes a different paradigm:

- Use routine clinical data you already collect.

- Apply heavy AI to approximate what expensive tests would have revealed.

- Run this at population scale so patterns emerge that individual labs would never see.

That shift unlocks advanced research and potentially treatment decision support in hospitals that cannot afford cutting-edge wet lab infrastructure.

The Deets

- Training data: 40M cells from Providence Health, pairing basic slides with immune scans.

- Scale: 14,000 patients, 300,000 detailed tumor images, 24 cancer types.

- Insights: Over 1,200 correlations between immune system behavior and clinical factors like stage and survival.

- Availability: The model is open source, which lowers the barrier for academic labs and hospitals to experiment with it.

Key takeaway

AI is turning cheap data into premium insights. If a $10 slide can approximate what used to take specialty labs and big budgets, the real constraint becomes who can deploy and interpret the models, not who can buy the machines.

🧩 Jargon Buster - Tumor microenvironment: The mix of immune cells, blood vessels, and surrounding tissue that sits around a tumor. How it behaves can matter as much as the tumor itself for predicting how a cancer will grow or respond to treatment.

Source: The Rundown AI

♟️ Power Plays

The Pentagon Plugs Gemini Into GenAI.mil

The Pentagon launched GenAI.mil, its first military-grade AI platform, and picked Google Cloud’s Gemini as the inaugural model. Publicly, the Department of Defense is framing it as an efficiency upgrade for the bureaucracy: summarizing policy manuals, drafting risk assessments, and wrangling unclassified documents. Beneath that framing, it is a signal that frontier AI models are becoming strategic infrastructure, not just productivity toys.

Pentagon leadership is selling this as making the force "more lethal," while Google emphasizes usage limits: only unclassified data, no training on military inputs, and guardrails around use cases. Even so, the Pentagon has confirmed that more models will be added, and every major frontier player knows that saying "no" is complicated when national security deals become a proxy for "trusted AI."

Why it matters

Once the Pentagon standardizes on AI platforms:

- Neutrality is over: Big models become part of national infrastructure and strategy.

- Procurement becomes power: A defense contract is not just revenue, it is brand validation for "safe" and "serious" AI.

- Policies will follow the tools: Military and government usage patterns often cascade into civilian norms and regulations.

The concern is not that Gemini is suddenly running targeting systems. It is that normalizing AI for planning, paperwork, and analysis in one of the world’s largest defense organizations makes it politically inevitable that all major labs will plug in, whether loudly or quietly.

The Deets

- Platform: GenAI.mil is the Department of Defense’s central generative AI environment.

- Model: Google’s Gemini is the first integrated model.

- Scope: Unclassified data only, explicit commitment that military data will not be used to further train the model.

- Roadmap: More models from other labs are expected, turning GenAI.mil into a multi-model AI stack for the military.

Key takeaway

When the Pentagon adopts generative AI, every major model eventually lines up. The battlefield for AI influence now runs through RFPs, security clearances, and procurement checklists.

🧩 Jargon Buster - GenAI platform: A centralized environment where an organization can deploy, manage, and monitor multiple generative AI models and tools under common policies and security controls.

Source: AI Secret, The Rundown AI

Regulators, Royalties And Google’s AI Scrutiny

While big tech races ahead on agents and defense deals, regulators are waking up to the input side of AI: whose content trains models and who gets paid. The European Union has opened a new investigation into whether Google’s AI summaries and "AI Mode" are using content from websites and YouTube without proper consent or compensation, and whether that behavior harms competition.

At the same time, India is floating a mandatory royalty regime that would let AI firms train on all copyrighted content as long as they pay creators. On the political front, U.S. state leaders from both parties are pushing back against Donald Trump’s proposed federal AI moratorium, arguing that Washington should not override the more nuanced AI rules already passed at the state level.

Why it matters

We are watching three fault lines solidify:

- Who controls training data: The EU’s case against Google is a proxy for how far platforms can go in repackaging web and video content into AI outputs.

- Who gets paid: India’s plan envisions a compulsory-licensing regime for AI, where training is allowed but comes with built-in royalties.

- Who writes the rules: U.S. states are signaling they will not quietly surrender AI policy to sweeping federal bans or moratoriums.

These moves will shape which business models are viable. Models that depend heavily on scraped proprietary content may face more lawsuits or licensing costs.

The Deets

- EU: Investigating whether Google’s AI search summaries and AI Mode misuse website and video content without consent or payment and whether that hurts rivals.

- India: Proposing a royalty system that lets AI firms train on copyrighted works while forcing payments to creators.

- United States: Bipartisan state-level resistance to a proposed federal AI moratorium reflects a desire to preserve existing state AI regimes.

Key takeaway

The training data free-for-all is ending. The fight is shifting from "Can we crawl this?" to "How much do we pay and who decides?"

🧩 Jargon Buster - AI royalty regime: A legal framework where AI companies can use copyrighted material for training but must pay standardized, regulated fees to the rights holders.

Source: AI Secret, The Rundown AI

🧰 Tools & Products

Coalition Turns Deepfakes Into Insurable Events

Cyber insurer Coalition is taking the opposite approach to traditional carriers, which are stuffing policies with "AI exclusions." It launched a Deepfake Response Endorsement that explicitly covers AI-powered reputation attacks: fake CEO videos, fabricated employee rants, or synthetic scandals that go viral. When it triggers, Coalition pays out, then deploys forensics, legal teams, and PR to contain the damage.

Rather than treating AI incidents as unpriceable "acts of God," Coalition treats them as operational cyber events. The difference is its data. Coalition runs an Active Data Graph that tracks attack surfaces, breach intel, and threat signals in real time. That telemetry lets it underwrite risks that static actuarial tables cannot touch.

Why it matters

If deepfakes are inevitable, the market will not wait for perfect regulation. It will price the chaos. Coalition’s move signals that:

- AI incidents are becoming standard line items in cyber coverage.

- Insurers with real-time security data will eat incumbents that rely on backward-looking loss histories.

- Companies can treat deepfake events as manageable operational risks, not existential surprises.

The Deets

- Product: Deepfake Response Endorsement folded into Coalition’s cyber offerings.

- Coverage: Forged executive videos, fake employee content, reputational attacks.

- Response package: Digital forensics, legal support, PR crisis comms.

- Differentiator: Pricing driven by live telemetry from its Active Data Graph, not static underwriting.

Key takeaway

Coalition is not just protecting against deepfakes, it is monetizing their inevitability, turning synthetic identity crises into an asset class that old-school insurers cannot model.

🧩 Jargon Buster - Endorsement (insurance): An add-on to an insurance policy that changes or expands coverage for specific risks without rewriting the entire policy.

Source: AI Secret

Factory Robots, Now With Lego Energy

German motion plastics company igus unveiled ReBeLMove Pro, a modular mobile base that can morph into a picker, conveyor shuttle, or cobot carrier in minutes. It drives up to 2 meters per second, carries 250 kilograms, tows nearly a ton, and runs an 8-hour shift on a single charge. Setup reportedly takes 15 minutes, and no coding or expensive integrator is required.

The kicker is price. At about €38,900, ReBeLMove Pro undercuts many autonomous mobile robots by roughly 25 percent while still targeting industrial environments. Instead of selling bespoke, brittle automation, igus is basically shipping a modular robot kit that factories can reconfigure whenever workflows change.

Why it matters

For small and mid-size manufacturers, automation historically meant long projects, custom integrators, and sunk costs that break when the line changes. A universal base that can:

- Take different attachments,

- Plug into ERP and fleet management tools, and

- Be reconfigured on-site

turns robots into ordinary equipment instead of science projects. That is a big psychological and financial unlock for factories that sat out the first wave of automation.

The Deets

- Specs: 2 m/s speed, 250 kg payload, nearly 1 ton towing, full-shift battery life.

- Setup: Around 15 minutes, no coding.

- Use cases: Picker, shuttle, cobot carrier, general material handling.

- Positioning: 25 percent cheaper than many AMRs while keeping industrial-grade performance.

Key takeaway

The future of factory automation might look less like sci-fi and more like Lego Technic for grown-ups: one base, many attachments, infinite reconfigurations.

🧩 Jargon Buster - Cobot: A collaborative robot designed to safely share a workspace with humans, usually smaller, slower, and easier to deploy than traditional industrial robots.

Source: Robotics Herald

💸 Funding & Startups

Robotics, Creatives And Consumer Debt Get Their AI Moment

AI is not just shipping models; it is also seeding whole new product categories across industries. Deep Robotics raised $68M to scale from quadrupeds to humanoids and embodied AI systems on the way to an eventual IPO. On the creative side, Dapple launched a platform to help agencies and studios manage submissions for awards, contests, and pitches.

In fintech, BON Credit released an AI-driven app that analyzes credit card data and generates personalized plans for reducing debt and interest, while TubeGuide launched a tool that converts YouTube tutorials into step-by-step guides. Each of these answers a mundane but valuable question: "What if the workflow we already do every day had an AI co-pilot baked in?"

Why it matters

This is the "boring is big" phase of AI startups. Rather than chasing general intelligence, these companies are:

- Wrapping AI around very specific workflows (creative submissions, YouTube learning, credit payoff).

- Targeting measurable outcomes like time saved, debt reduced, conversion improved.

- Building product moats from data and process, not just model weights.

On the robotics front, big rounds like Deep Robotics’ point to a near-future where humanoids and quadrupeds move from viral demos to line items on corporate capex sheets.

The Deets

- Deep Robotics: Raised $68M to expand from quadrupeds toward humanoids and embodied AI on the way to an eventual IPO.

- Dapple: Platform for creative industries to manage awards, contests, and pitch submissions.

- BON Credit: Consumer app that mines credit card histories to build personalized payoff and interest reduction plans.

- TubeGuide: Converts YouTube tutorial videos into structured, actionable guides.

Key takeaway

The next wave of AI value will not come from the fanciest models, it will come from boring workflows that suddenly feel magical.

🧩 Jargon Buster - Embodied AI: AI systems that are embedded in physical robots, giving models a body to act in the real world rather than just generating text or images.

Source: AI Secret, Robotics Herald

🧪 Research & Models

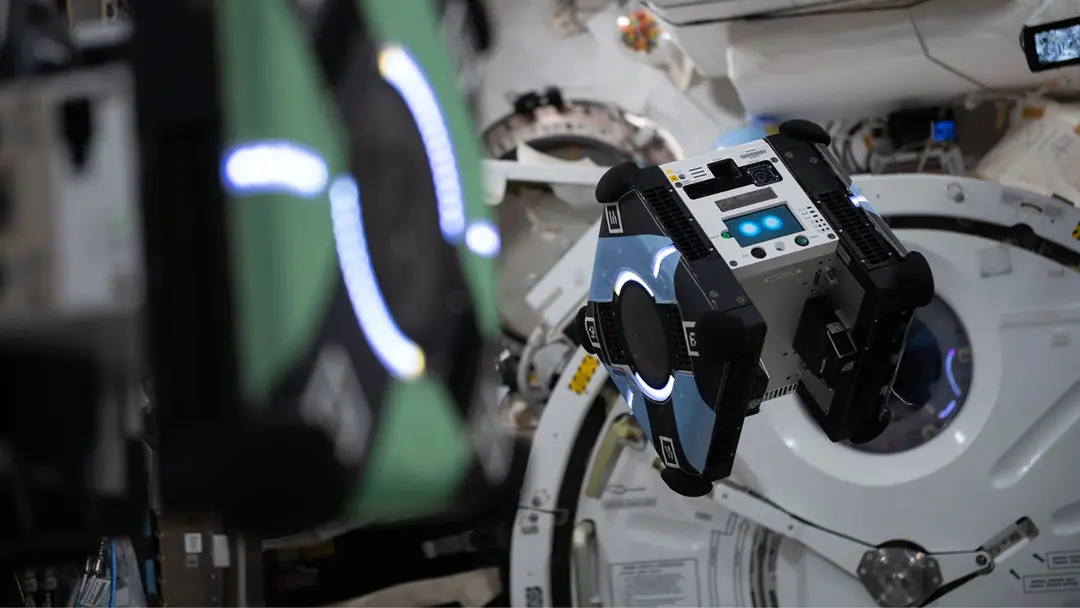

NASA’s Astrobee Learns To Improvise In Orbit

On the International Space Station, NASA and Stanford plugged a machine learning warm-start planner into Astrobee, a toaster-sized free-flying robot, and told it to navigate the station’s cable jungle on its own. Same hardware, same thrusters, no extra compute. The difference: the AI now guesses a feasible path from prior experience instead of planning every trajectory from scratch. That change alone made Astrobee move about 50 to 60 percent faster while maintaining safety constraints.

Space robots have long lived under the tyranny of cold-start planning and mission control’s fear of unverified autonomy. Every trajectory had to be recomputed and re-verified with painfully limited compute and a lot of human oversight. A warm-start planner that respects safety but slashes planning time is a quiet revolution: robots can actually keep up with their environment rather than waiting for Earth to tell them what to do.

Why it matters

This is bigger than one cute ISS robot. It points to:

- On-orbit assembly that does not stall for ground approvals.

- Satellite servicing where robots can improvise around debris, solar drift, and thermal noise.

- Deep-space missions where communication lag no longer paralyzes motion planning.

The bottleneck shifts from "Can autonomy be trusted?" to "How bold can we make the mission design if the robots can handle more of the moment-to-moment decisions?"

The Deets

- System: Astrobee free-flying robot on the ISS.

- Upgrade: Machine learning warm-start planner that proposes good candidate paths.

- Performance: Around 50 to 60 percent faster navigation without breaking safety rules.

- Impact: Demonstrates that machine learning can safely run in orbit for real robotics tasks.
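The warm-start idea can be shown on a toy problem. The sketch below is not NASA's or Stanford's planner (the source does not describe its internals): it smooths a chain of 1D waypoints with plain gradient descent, once from a blank guess and once from the solution to a similar, previously solved query. In the real system a learned model supplies the initial guess; here a cached earlier solution stands in for that prediction.

```python
import math
from typing import List, Tuple


def plan_path(start: float, goal: float, initial: List[float],
              step: float = 0.125, tol: float = 1e-6,
              max_iters: int = 10_000) -> Tuple[List[float], int]:
    """Smooth a chain of 1D waypoints between fixed endpoints.

    Minimizes the sum of squared gaps between consecutive points with plain
    gradient descent, starting from `initial`. Returns the waypoints and the
    number of iterations used. The cost's curvature is below 8, so a step of
    0.125 keeps gradient descent stable.
    """
    x = list(initial)
    n = len(x)
    for it in range(max_iters):
        full = [start] + x + [goal]
        # Gradient of sum((x[i+1] - x[i])**2) with respect to each interior point.
        grad = [2.0 * (2.0 * full[i] - full[i - 1] - full[i + 1])
                for i in range(1, n + 1)]
        if math.sqrt(sum(g * g for g in grad)) < tol:
            return x, it
        x = [xi - step * gi for xi, gi in zip(x, grad)]
    return x, max_iters


N = 8  # number of interior waypoints

# Cold start: no prior experience, every waypoint guessed at the origin.
cold_path, cold_iters = plan_path(0.0, 10.0, [0.0] * N)

# Warm start: reuse the answer to a similar, earlier query (goal at 9.5)
# as the initial guess for the new query (goal at 10.0).
previous_path, _ = plan_path(0.0, 9.5, [0.0] * N)
warm_path, warm_iters = plan_path(0.0, 10.0, previous_path)

print(f"cold start: {cold_iters} iterations, warm start: {warm_iters} iterations")
```

Both runs converge to the same straight-line path; the warm start simply begins closer to it, so it needs fewer iterations. In this convex toy, the initial guess changes only how fast the solver converges, not where it ends up.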

Key takeaway

A robot just proved that machine learning can safely run in orbit, making autonomy not a moonshot for the future but the new default expectation.

🧩 Jargon Buster - Warm start: Starting an optimization or planning process from a good initial guess rather than from scratch, which often makes solutions faster and more efficient.

Source: Robotics Herald

A Bionic Hand With Its Own "Subconscious"

Engineers at the University of Utah rebuilt a commercial prosthetic hand from the inside out. They packed its fingertips with pressure and optical proximity sensors and trained a neural network to produce "natural grasping behavior." Instead of asking users to micromanage every finger joint, the system takes simple EMG signals like "grip" or "release" from residual muscles and lets the AI handle the micro-movements.

The result feels less like operating a device and more like delegating to a robotic co-pilot. The hand senses contact, predicts when objects are about to slip, and automatically adjusts grip force in real time. It is not brain-controlled or voice-controlled. It is AI simulating the subconscious motor layer your nervous system usually handles without you noticing.

Why it matters

Most prosthetic hands force users to juggle five fingers like independent mechanical tools through clumsy control schemes. This system flips the script:

- Humans supply intent, not precise instructions.

- The AI handles continuous adjustments and error correction.

- The prosthesis starts to look like an exosuit for the hand, a model that could generalize to other wearable robotics.

This is not just a better prosthetic; it is a prototype for everyday augmentation, where devices quietly optimize around you.

The Deets

- Sensors: Pressure and optical proximity sensors in the fingertips.

- Control: EMG signals for high-level commands, neural network for fine control.

- Behavior: Detects contact, predicts slip, self-corrects grip force.

- Target users: Primarily amputees, but the control logic points toward broader exoskeleton and wearable use cases.
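The split between user intent and automatic fine control can be sketched as a simple control loop. This is a rule-based stand-in, not the Utah team's neural network: the EMG threshold, force values, and `HandState` type are hypothetical, and slip arrives as a ready-made flag rather than being predicted from pressure and proximity signals.

```python
from dataclasses import dataclass


@dataclass
class HandState:
    grip_force: float = 0.0  # hypothetical force units


def decode_intent(emg_envelope: float, threshold: float = 0.3) -> str:
    """Map a normalized EMG envelope (0..1) to a coarse command."""
    return "grip" if emg_envelope >= threshold else "release"


def control_step(state: HandState, emg_envelope: float, contact: bool,
                 slipping: bool, base_force: float = 2.0,
                 slip_boost: float = 0.5, max_force: float = 10.0) -> float:
    """One control tick: the user supplies intent, the controller sets force."""
    if decode_intent(emg_envelope) == "release":
        state.grip_force = 0.0  # the user's release command always wins
    elif not contact:
        state.grip_force = 0.0  # fingers still closing; nothing to squeeze yet
    else:
        # Hold at least a gentle base force once the fingertips touch the object.
        state.grip_force = max(state.grip_force, base_force)
        if slipping:
            # Tighten in small increments while slip is detected, up to a cap.
            state.grip_force = min(state.grip_force + slip_boost, max_force)
    return state.grip_force


# (EMG envelope, fingertip contact, slip detected) for successive ticks.
readings = [
    (0.1, False, False),  # relaxed: hand open
    (0.8, False, False),  # user signals "grip"; fingers close
    (0.8, True, False),   # contact: base force applied
    (0.8, True, True),    # object starts to slip: tighten
    (0.8, True, True),    # still slipping: tighten again
    (0.8, True, False),   # stable: hold force
    (0.1, True, False),   # user signals "release"
]

hand = HandState()
forces = [control_step(hand, *reading) for reading in readings]
print(forces)  # prints [0.0, 0.0, 2.0, 2.5, 3.0, 3.0, 0.0]
```

The user only ever chooses between two commands; every force adjustment after contact comes from the controller, which is the "subconscious" layer the article describes.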

Key takeaway

Give a prosthetic its own instincts and you stop building a hand. You start building wearable robotics for everyone.

🧩 Jargon Buster - EMG (electromyography): A method of reading electrical activity from muscles, often used to translate muscle signals into commands for prosthetics or interfaces.

Source: Robotics Herald

Mistral’s Devstral 2 And The New Coding Stack

French startup Mistral launched Devstral 2, an upgraded family of coding models, plus Vibe CLI, a terminal-native coding agent. The flagship 123B Devstral 2 model hits 72.2 percent on SWE-bench Verified, essentially matching top open-weight rival DeepSeek V3.2 while being about 5 times smaller.

The compact Small 2 variant comes in at 24B parameters and is designed to run on a single GPU or even a laptop CPU, making serious coding assistance possible on consumer hardware. Devstral 2 arrives with a "modified" MIT license that adds restrictions for companies with $20M or more in monthly revenue, while Vibe CLI is free to use under an Apache 2.0 license. Vibe scans codebases, proposes multi-file changes, and acts like a surgical agent for your repo.

Why it matters

Mistral is shipping aggressively. After Mistral 3, Devstral 2 pushes the open-weight coding frontier in two directions:

- Near-frontier performance that is still self-hostable.

- A small model that makes local and offline dev agents viable on everyday hardware.

Licensing signals another trend: differential access where small teams get more permissive rights than large enterprises.

The Deets

- Devstral 2 (123B): 72.2 percent on SWE-bench Verified, a strong open-weight competitor about 5 times smaller than DeepSeek V3.2.

- Devstral Small 2 (24B): Targets single GPU or CPU use, competitive against other open-weight small models.

- Licensing: Modified MIT license with revenue-related limits; Vibe CLI under Apache 2.0.

- Vibe CLI: Terminal-native coding agent that can scan repositories and apply coordinated, multi-file edits.
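The single-GPU claim for the 24B model comes down to weight-memory arithmetic: parameter count times bytes per parameter. The rough sketch below assumes common precisions (bf16, int8, int4); which quantized formats are actually offered for Devstral is not stated in the source, and the figures cover weights only.

```python
# Approximate storage per parameter at common precisions (bytes).
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Weights-only footprint in gigabytes (1 GB = 1e9 bytes).

    Ignores the KV cache, activations, and runtime overhead, so actual
    memory use is higher.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


MODELS = {"Devstral Small 2": 24e9, "Devstral 2": 123e9}

for name, params in MODELS.items():
    for precision in BYTES_PER_PARAM:
        gb = weight_memory_gb(params, precision)
        print(f"{name:<17} {precision:<5} ~{gb:6.1f} GB")
```

At 4-bit precision the 24B model's weights come to about 12 GB, leaving some room for the KV cache on a 16 GB or 24 GB card, while the 123B model still needs about 61.5 GB for weights alone.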

Key takeaway

We are heading toward a world where a developer’s coding agent runs next to their terminal, not in someone else’s cloud, and still feels close to frontier quality.

🧩 Jargon Buster - SWE-bench Verified: A benchmark that measures how well coding models can fix real-world GitHub issues, verifying that the generated patches actually solve the problem.

Source: The Rundown AI

GLM-4.6V: Open-Source Multimodal With Native Tools

Z.ai released GLM-4.6V, an open-source multimodal model with native function calling aimed at complex agentic workflows. Rather than just seeing and describing images, GLM-4.6V is built to reason across text and visuals and then call tools based on that understanding.

Positioned as an open alternative in the agent space, GLM-4.6V slots neatly into the same world that AAIF is trying to standardize: models that can perceive, decide and act.

Why it matters

If you want truly useful agents, they need to:

- See and interpret real-world artifacts (documents, screenshots, diagrams).

- Call tools and APIs based on that context.

- Do it in a way that developers can inspect and self-host.

GLM-4.6V is another sign that open multimodal agents are not going to be monopolized by a few U.S. labs.

The Deets

- Type: Open-source multimodal model.

- Superpower: Native function calling designed for agent workflows.

- Use cases: Complex tool-using agents that need to read and act on mixed media inputs.
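Function calling boils down to the model emitting a structured request that the application validates and executes. The minimal sketch below assumes the model returns a JSON object with `name` and `arguments` fields, a common convention; GLM-4.6V's exact output format is not described in the source, and the `lookup_order` tool and `register` helper are hypothetical.

```python
import json
from typing import Any, Callable, Dict

# Tools the application exposes to the model (hypothetical examples).
TOOLS: Dict[str, Callable[..., Any]] = {}


def register(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Decorator that adds a function to the tool registry under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@register
def lookup_order(order_id: str) -> Dict[str, str]:
    # Stand-in for a real database or API call.
    return {"order_id": order_id, "status": "shipped"}


def dispatch(model_output: str) -> Any:
    """Run the tool call a model emitted as JSON text."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        # Never execute names the application did not register.
        raise ValueError(f"model requested unknown tool: {name}")
    return TOOLS[name](**call["arguments"])


# After reading an order number off a screenshot, a model might emit:
model_output = '{"name": "lookup_order", "arguments": {"order_id": "A-1042"}}'
print(dispatch(model_output))
```

Keeping execution in application code, behind an explicit registry, is what lets developers inspect and restrict what an agent can actually do.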

Key takeaway

The race is no longer just about text models. The next competitive front is open, tool-using multimodal agents that can see, think, and do.

🧩 Jargon Buster - Function calling: A model’s ability to request specific tools or APIs with structured arguments, turning natural language intent into concrete actions in code or services.

Source: AI Secret, The Rundown AI

⚡ Quick Hits

- Slack to OpenAI pipeline: Slack CEO Denise Dresser is leaving to become OpenAI’s chief revenue officer, where she will oversee the company’s rapidly expanding enterprise business.

- Meta’s "Avocado" model: Meta is reportedly preparing a new frontier AI model codenamed "Avocado" for Q1 2026, which may be proprietary, unlike its earlier open-weight Llama releases.

- Microsoft’s $19B Canada bet: Microsoft is investing $19B CAD to expand AI infrastructure across Canada by 2027 and pledging to keep Canadian user data stored domestically.

- GPU smuggling crackdown: The U.S. Department of Justice detained two men for allegedly running a smuggling network shipping Nvidia GPUs to China, part of a probe that has already seized more than $50M in chips.

- Antarctic robot scout: A robotic float mapped waters beneath East Antarctic ice shelves, detecting warm water at Denman Glacier and improving sea-level rise forecasts.

- Samsung’s Ballie delayed: Samsung’s Ballie home robot is delayed again with no new launch date, raising questions about the viability of consumer home companions.

- Anthropic x Accenture: Anthropic and Accenture signed a three-year partnership to train 30,000 consultants on Claude and help enterprises move from AI pilots to measurable ROI.

🛠️ Tools Of The Day

- ChatTube: Turn any YouTube video into an interactive conversation with AI, including instant summaries, translations and Q&A, now featured on Oncely.com with a hefty discount.

- Vibe CLI: Mistral’s terminal-native coding agent that scans your repo and applies multi-file changes, powered by Devstral 2 and licensed under Apache 2.0 so developers can adopt it freely.

- TRAE (The Real AI Engineer): A highly price-competitive coding agent focused on shipping practical dev workflows rather than flashy demos.

- KaraVideo: A unified hub for AI video models, giving creators one place to experiment across multiple video generation systems.

- Momen AI: Bridges the gap from "vibe" to viable product, powering Lovable-style no-code app creation by translating fuzzy ideas into functional software.

- Claude Code: Anthropic’s coding assistant with deep-context understanding and a new Slack integration, making it easier to bring code reasoning into team chat.

- GLM-4.6V: Open-source multimodal model with native tool use for building sophisticated vision-plus-text agents.

Today’s Sources: AI Secret, The Rundown AI, Robotics Herald