Meta Gets Robotic; Synthetic Actors; R U Making Workslop?

Metabot: Meta’s Humanoid Robotics Gamble

Meta CTO Andrew Bosworth confirmed in an interview that Metabot is the company’s most ambitious new project since AR/VR - aiming to create the software backbone for humanoid robots rather than just build flashy machines. Is this an attempt to pivot quickly to the next big thing beyond pure AI chatbots? Perhaps.

- From hardware showcase to platform play... Bosworth said Meta doesn’t intend to mass-produce robots. Instead, the company wants to build the control stack - software that coordinates perception, planning and dexterous manipulation - then license it to hardware makers. The analogy: Android for smartphones, or Oculus for VR.

- Why now? According to Bosworth, locomotion (walking, balancing, climbing stairs) has been “largely solved” by the field. The unsolved problem - and the piece Meta wants to own - is dexterous manipulation. Picking up a glass of water or folding laundry requires millisecond feedback loops, tactile sensing, and fine motor coordination. Today’s humanoids can flip, dance, or carry boxes, but most still crush or drop delicate objects.

- AR-scale investment... Meta expects the spend on Metabot to hit augmented reality–level budgets, signaling billions of dollars over multiple years. Bosworth stressed that Zuckerberg approved the project as a long-term bet, with timelines stretching a decade or more.

- Strategic positioning... According to PCMag, Meta wants its system to become the “backbone” layer across robotics - hardware-agnostic, standardized and licensable. Instead of competing head-on with Tesla’s Optimus or Figure AI, Meta wants those companies (and many others) to depend on Meta’s software.

- Ecosystem and control... Bosworth compared this to Meta’s Oculus/Quest approach: use subsidized early hardware to shape developer ecosystems, then pivot to being the layer everyone must plug into. If Metabot becomes the de facto manipulation OS, Meta could quietly control how humanoids interact with the physical world, no matter who builds the bodies.

How Do They Stack Up?

Meta (Metabot)

- Strategy: Software-first, hardware-agnostic. Meta wants to create the “backbone” OS for robots, solve dexterous manipulation and control, then license it broadly.

- Core Focus: Fine motor skills (e.g., pouring water, folding laundry, handling fragile objects).

- Business Model: Platform play - think Android for humanoids. Meta doesn’t want to build millions of robots; it wants every hardware maker to depend on its control stack.

- Investment Scale: “AR-level” - billions over a decade, signaling this is Meta’s next platform bet after VR/AR.

Tesla (Optimus)

- Strategy: Vertical integration. Tesla builds the robot and trains it on real-world tasks inside its factories.

- Core Focus: Utility within Tesla’s own supply chain - repetitive factory work, logistics tasks, then consumer applications down the line.

- Business Model: Proprietary stack (hardware + software + data), designed to give Tesla a closed-loop advantage. Musk has floated a future where Optimus is “more valuable than Tesla’s cars.”

- Strength: Tesla has unmatched access to real-world training environments (factories, warehouses), which can accelerate task learning.

Figure AI

- Strategy: Full-stack humanoid company, focused on general-purpose workers. Their Figure 01 robot has already been piloted at BMW, among others.

- Core Focus: Workforce replacement for labor shortages - logistics, warehouses, potentially eldercare.

- Business Model: Hardware + SaaS subscription for “robot labor.” Targeting B2B markets before consumer rollout.

- Strength: $850M+ in funding (from Microsoft, Nvidia, OpenAI, Jeff Bezos) plus partnerships with enterprise customers gives them a strong go-to-market position.

Boston Dynamics (Hyundai-owned)

- Strategy: Robotics pioneer moving slowly toward commercial humanoids after years of selling the Spot quadruped and developing Atlas as a research humanoid. Their humanoid robots are mainly demos today.

- Core Focus: Engineering feats and proof-of-concept. Atlas can backflip, dance, and carry loads - but still struggles with dexterity.

- Business Model: Hardware sales (Spot) and enterprise robotics. Humanoids remain R&D-heavy with no clear commercial roadmap yet.

- Strength: Decades of robotics expertise, unmatched in locomotion and balance.

Other major players: Apptronik, Agility Robotics and 1X. In China, much of the attention is on Unitree Robotics.

Key Differences

- Platform vs Product: Of the companies compared here, Meta is the only one betting on being the software layer across the industry instead of selling robots directly.

- Dexterity vs Locomotion: Tesla, Figure, and Boston Dynamics all emphasize “look, it walks!” demos. Meta argues walking is solved; true market value lies in manipulation and adaptability.

- Ecosystem Play: Meta wants to repeat the Oculus/Quest pattern: subsidize early development, attract devs, then license the backbone to everyone else. Tesla and Figure, by contrast, want to own both the hardware and the end-customer relationship.

- Timelines: Tesla and Figure are already piloting robots in factories. Boston Dynamics shows off flashy demos. Meta is early-stage, aiming for longer-term dominance through infrastructure control.

Humanoid robots could be a trillion-dollar market over the next two decades. The strategic question is:

- Will value accrue to platform players (Meta’s OS model)?

- Or to vertically integrated manufacturers (Tesla, Figure, Boston Dynamics)?

If Meta succeeds, its software could be the unseen layer running inside rivals’ robots. If Tesla and Figure succeed, Meta risks being a late entrant without the real-world training data needed to make its platform indispensable.

Read more: AI Breakfast, PCMag

Vibes: From Generative Novelty to Distribution Layer

Alongside robotics, Meta is reframing AI video as something social, not just experimental, with Vibes: a feed of short AI-generated videos inside the Meta AI app and on meta.ai. And it's getting roasted by some for it.

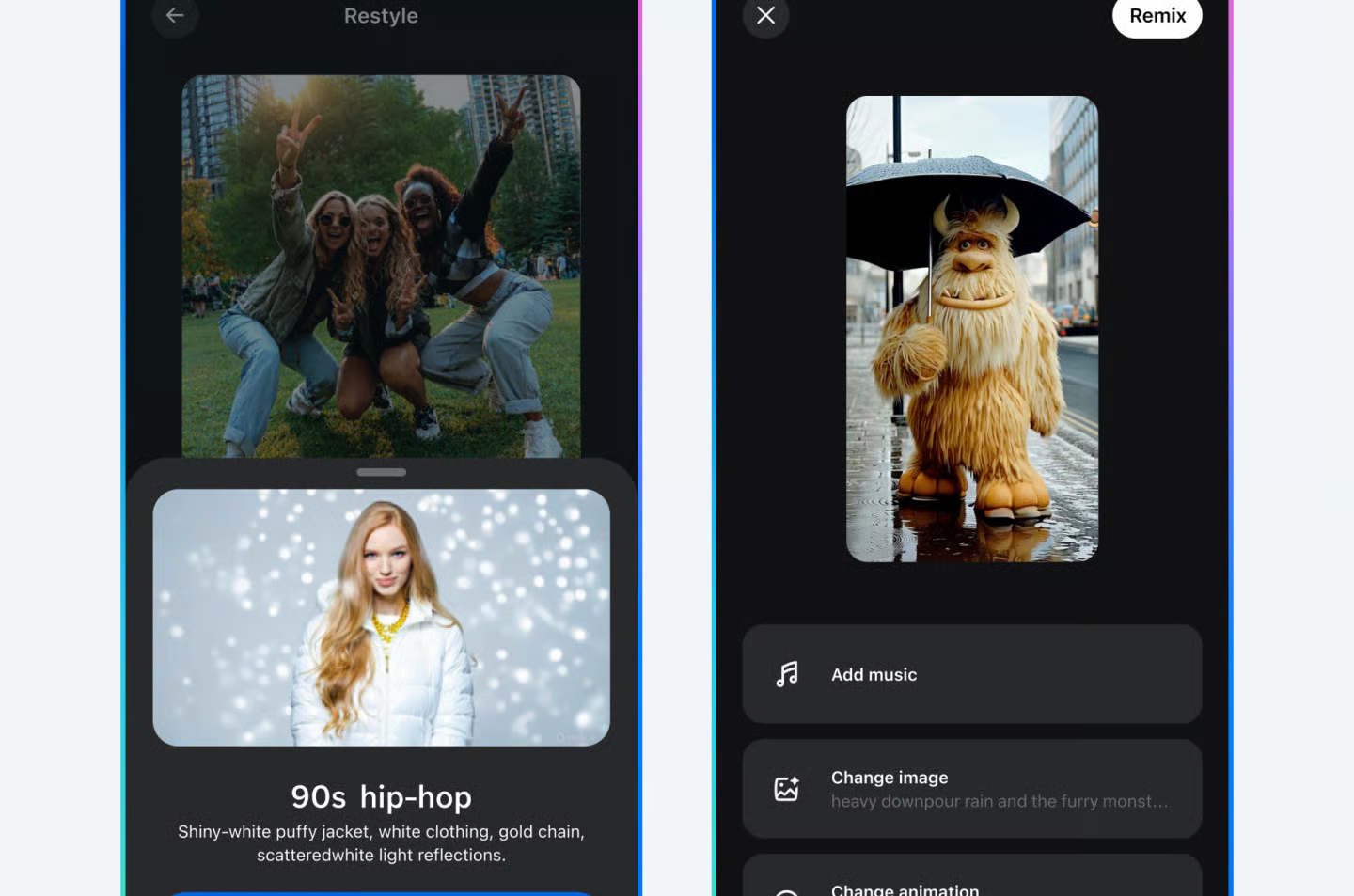

- From prompts to feeds. Early AI video platforms focused on novelty (“type a prompt, get a surreal clip”). Vibes turns that into networked media - a TikTok-like feed where every clip carries its generating prompt. That creates a dual artifact: the content and the recipe.

- Participatory loop. Users don’t just watch; they remix. If someone makes an AI music video from the prompt “anime-style neon Tokyo street scene,” others can tweak that prompt and spawn variations, amplifying replication and engagement. It’s the TikTok duets mechanic for AI.

- Ecosystem stakes. Meta isn’t just seeding an AI toy - it’s trying to control the distribution surface for generative media. By making Vibes the default showcase, it positions itself as the YouTube/TikTok layer for AI-native video creators, ensuring those users stay inside Meta’s ecosystem.

According to Business Insider, people are calling this AI slop “that no one really asked for.” Well, how do you do, sir?

Read more: AI Breakfast, Business Insider

🎬 Synthetic Actors Test Hollywood’s Limits

AI talent studio Xicoia says its virtual performer Tilly Norwood is in talks with major agencies - drawing sharp backlash from human actors threatening to boycott any firm that signs a “synthetic” client.

Tilly debuted in comedy sketches with a designed backstory, voice and arc; the studio (a spin-out of Particle6) frames her as the next bankable star.

The speed of the shift - from studios dismissing AI personas to exploring deals - looks like a trial balloon for lower-risk, infinitely “available” talent that can iterate without SAG-AFTRA contracts or reshoots. Expect fierce labor pushback, tangled rights questions around likeness and voice, and new disclosure norms to protect audience trust.

Read more: The Rundown AI

Apple’s Quiet Siri Re-Re-Rebuild

Apple employees are dogfooding an internal, ChatGPT-style app codenamed “Veritas,” stress-testing features like voice-driven search across personal data and photo edits.

It runs on a stack codenamed “Linwood” that mixes in-house and third-party models, as Apple scrambles after delays that pushed a fuller Siri overhaul to March 2026.

The strategy: fold these capabilities straight into Siri rather than ship a separate chatbot. That's sensible given the crowded assistant market, but the clock is ticking amid talent churn and a fast-moving field.

Read more: The Rundown AI, AI Breakfast

‘Workslop’ - The Hidden AI Tax At Work

A Stanford/BetterUp Labs survey of 1,100+ U.S. workers finds that ~15% of the work content they receive is now “workslop”: AI-polished but shallow output that forces recipients to spend ~116 minutes (just under two hours) dealing with each instance - an invisible productivity tax pegged at ~$186 per employee per month.

Beyond wasted time, “slop” corrodes trust and collaboration, with recipients rating senders as less reliable and creative. AI helps when it augments judgment; it hurts when it replaces it.

Read more: The Rundown AI, AI Secret

Google Stacks The Upgrades: Flow, NotebookLM, Labs

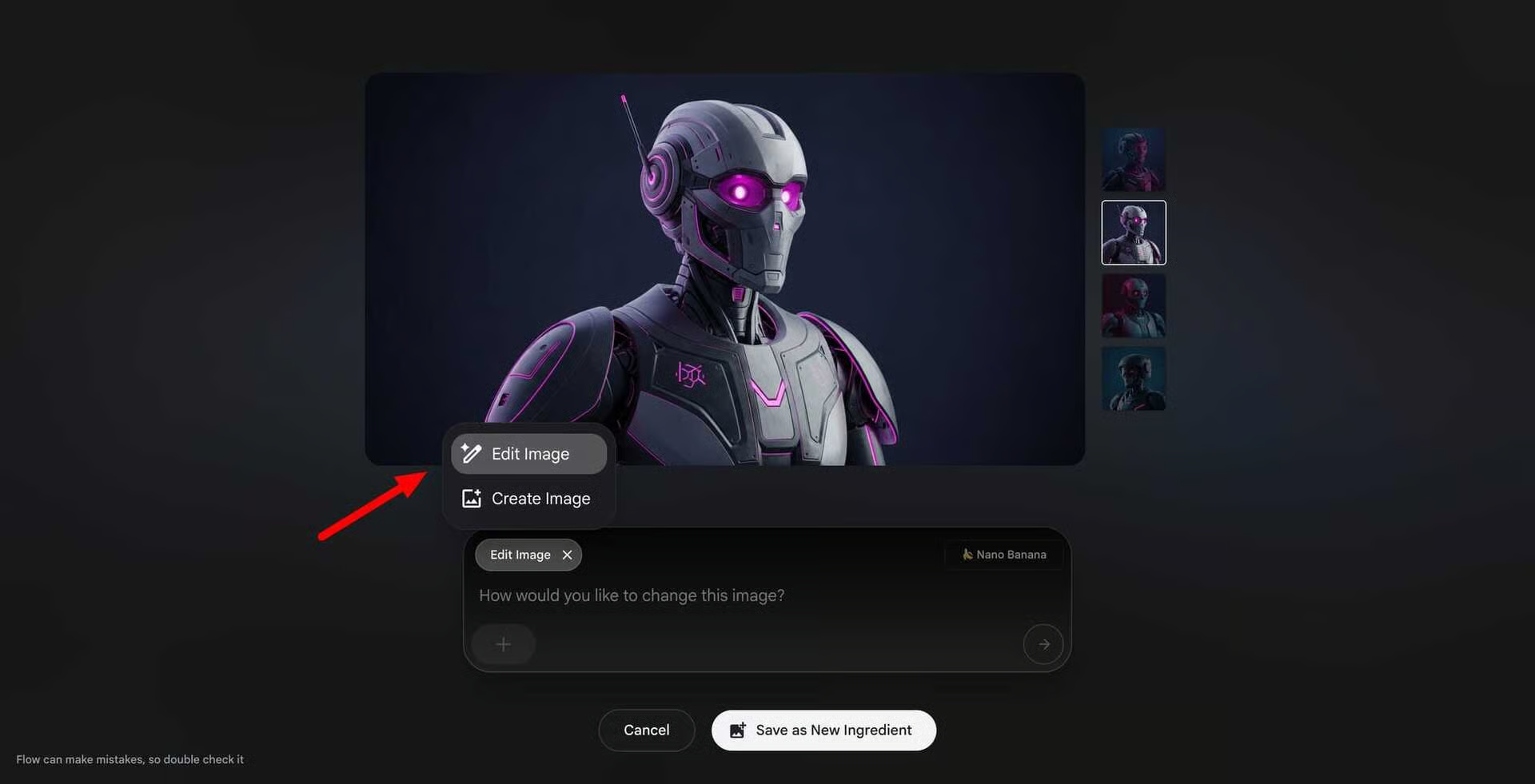

Google is turning Flow into a more modular creation environment, with in-canvas edits powered by Nano Banana (Google's Gemini 2.5 Flash Image model) and a prompt expander for structured styles, while NotebookLM adds persistent chat histories to support multi-session research. In YouTube Music, limited tests of AI radio hosts add context between songs.

The throughline: AI as an embedded layer, not a bolt-on, helping users stay in flow instead of hopping between tools.

Read more: AI Breakfast

🏌️ The Ryder Cup As A Pop-Up Smart City

At Bethpage Black, HPE wired the Ryder Cup with 650 Wi-Fi 6E nodes, private 5G, an Nvidia-powered private cloud and computer vision - routing crowds, predicting lines, flagging anomalies, and auto-cutting highlights for a quarter-million fans. Staff queried an on-site AI ops assistant instead of paging through manuals.

Sports are quietly becoming real-time, data-orchestrated venues, shifting value from screens to the infrastructure that choreographs the day.

Read more: AI Secret

Build An AI Calendar Agent In n8n

Spin up an n8n workflow with an AI Agent node backed by a chat model (e.g., GPT-4o mini), attach Google Calendar as a tool, and let natural-language messages like “Dinner 7–9 p.m. tonight” create events directly. Add the {{ $now }} expression to the system message so the agent always knows the current date and time, and extend the workflow to WhatsApp/Telegram for chat-based scheduling.
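Why the timestamp matters: a model cannot turn “tonight” into a calendar slot unless it is told what “now” is. The sketch below shows, outside n8n, roughly what injecting the current time into the system message buys you. It is a minimal illustration under stated assumptions: `build_system_message`, `resolve_tonight` and `to_event_body` are hypothetical helpers, not part of n8n, and the event dict only mirrors the `summary`/`start.dateTime`/`end.dateTime` fields of a Google Calendar API v3 event.

```python
from datetime import datetime, timedelta, timezone


def build_system_message(now: datetime) -> str:
    """Hypothetical helper: embed the current timestamp so relative phrases resolve."""
    return (
        "You are a scheduling assistant. "
        f"The current date and time is {now.isoformat()}. "
        "Interpret relative dates and times against this timestamp."
    )


def resolve_tonight(now: datetime, start_hour: int, end_hour: int) -> tuple[datetime, datetime]:
    """Hypothetical helper: map 'start_hour-end_hour tonight' (24h clock) onto
    concrete datetimes on the same day as `now`, keeping its timezone."""
    start = now.replace(hour=start_hour, minute=0, second=0, microsecond=0)
    end = now.replace(hour=end_hour, minute=0, second=0, microsecond=0)
    if end <= start:  # window crosses midnight, e.g. 11 p.m. to 1 a.m.
        end += timedelta(days=1)
    return start, end


def to_event_body(summary: str, start: datetime, end: datetime) -> dict:
    """Build a dict shaped like the Google Calendar API v3 Event fields used here."""
    return {
        "summary": summary,
        "start": {"dateTime": start.isoformat()},
        "end": {"dateTime": end.isoformat()},
    }


if __name__ == "__main__":
    now = datetime(2025, 9, 29, 14, 30, tzinfo=timezone.utc)  # assumed "current" time
    print(build_system_message(now))
    start, end = resolve_tonight(now, 19, 21)  # "Dinner 7–9 p.m. tonight"
    print(to_event_body("Dinner", start, end))
```

In the real workflow the model does the date arithmetic itself; the point of the sketch is that without the timestamp in its context, “tonight” has nothing to anchor to.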

Read more: The Rundown AI

🛠️ Tools & Launches — Expanded

- ChatGPT Pulse - Proactive daily briefs that synthesize your chats, emails, and calendar into morning cards. Early glimpse of agentic assistants that act without prompting but with guardrails (daily cap, feedback loop).

- HunyuanImage 3.0 (Open Source) - Tencent’s latest text-to-image model claims parity with top closed systems; open weights invite rapid community fine-tuning for niches (product renders, stylized ads).

- Kilo Code (JetBrains) - Open-source, model-agnostic coding assistant now inside IntelliJ/PyCharm, with pay-per-use economics and 325k+ installs signaling strong developer pull.

- GitHub Copilot CLI - A terminal-native agent that reads/writes files, runs commands, and speaks MCP; defaults to Claude Sonnet 4 but switchable - useful for repo surgery and scripted chores.

- Ray3 (Luma) - HDR, reasoning-guided video generation with keyframe control; strong for cinematic sequences, previz, and ad concepts that need iteration with taste rather than one-shot prompts.

- toopost - Centralizes RSS, Reddit, Telegram, Google & Facebook feeds, layering AI summaries, fact-checks, and drafting - handy for comms teams watching many channels.

- exa-code - Retrieval for coding assistants that hunts hyper-relevant web context to reduce hallucinations; slot it behind your agent to ground long-tail API usage.

- myNeutron - “One memory for everything” knowledge hub; practical if your team keeps re-explaining context to different AI tools.

- Creatium - A training-focused platform that lets teams generate interactive content, complete with AI coaches and role-play scenarios.

- Cloudflare VibeSDK - A one-click framework for deploying scalable AI-powered coding environments on Cloudflare infrastructure. VibeSDK emphasizes speed, global availability and the ability to spin up private “vibe coding” workspaces instantly.

- WebTrafficWatch - An AI analytics companion that ingests Google Analytics 4 and Search Console data, then auto-generates weekly reports flagging significant traffic shifts.

Read more: The Rundown AI, AI Secret

🗞️ Everything Else Today

- Meta talent moves: Ex-OpenAI researcher Yang Song joins Meta Superintelligence Labs (MSL).

- Gemini 2.5 Flash/Flash-Lite updates: Better tool-use, instruction following, and efficiency for agent chains.

- OpenAI hiring monetization lead: New Applications org under Fidji Simo seeks an ads/monetization exec as Pulse and proactive agents mature.

- Black Forest Labs reportedly raising up to $300M (toward a $4B valuation) for AI imaging.

- YouTube Music tests AI hosts that add context between tracks.

Today’s Sources: