OpenAI Omnipresence? Meta Goes Giga; Train Your Robot

Today's AI Outlook: 🌤️

OpenAI Wants Hardware On Your Body, Desk And Eventually Everywhere

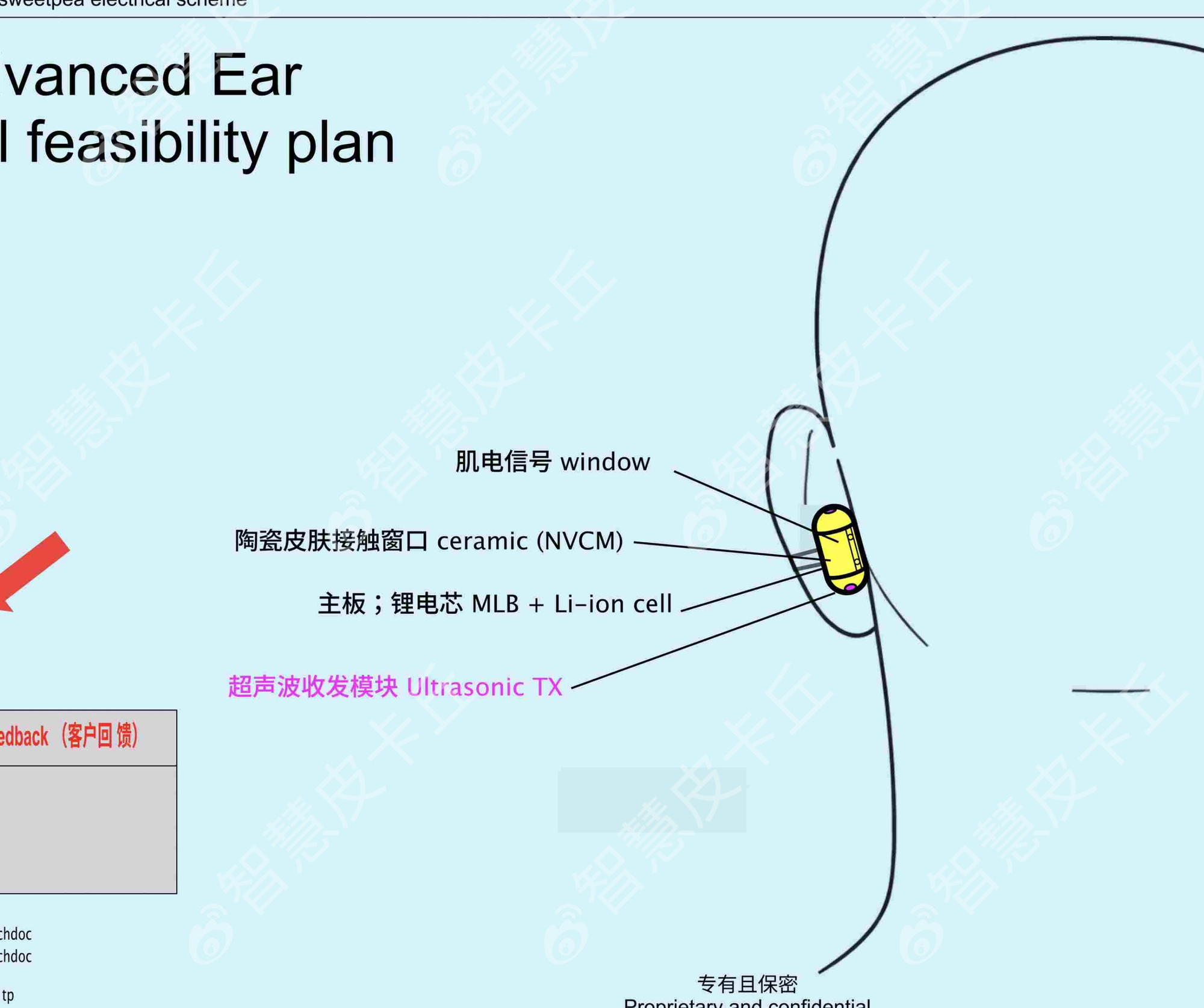

OpenAI is reportedly gearing up for a five-device hardware lineup, with supply chain partners preparing production capacity through Q4 2028. According to manufacturing sources, the first device out of the gate is Sweetpea, an audio-first AI wearable designed in collaboration with Jony Ive’s design team. Think less AirPods, more “AI-native companion you actually talk to.”

Sweetpea is described as a metal, eggstone-shaped core with removable behind-the-ear modules. It is expected to ship as early as September, with ambitious first-year volumes of 40M to 50M units. Under the hood, this is no accessory-tier gadget. Reports point to a 2nm smartphone-class processor, likely Samsung Exynos, plus a custom chip built specifically for real-time voice control capable of triggering iPhone-level actions.

Why it matters

This is OpenAI’s clearest signal yet that AI is moving off screens. If Sweetpea lands as described, it positions AI not as an app you open, but as a presence you wear. That is a fundamental shift in how users interact with models, and it explains why the bill of materials reportedly looks closer to a smartphone than earbuds.

The deets

- Foxconn instructed to prep capacity for up to five OpenAI-branded devices

- Additional form factors rumored include a home device and a pen-like AI interface

- Sweetpea’s internals reportedly rival flagship phones

- Separately, OpenAI acquired Torch, a four-person medical data startup, in a $100M equity deal to power a new ChatGPT Health service

Key takeaway

OpenAI is building the interface layer for everyday life, and hardware is how it locks that in.

🧩 Jargon Buster - AI-native hardware: Devices designed around continuous AI interaction, not retrofitted with an assistant as an afterthought.

Source: AI Breakfast

⚡ Power Plays

Meta, Microsoft Bet Big On Compute - With Very Different Vibes

Meta unveiled Meta Compute, a top-level initiative to build tens of gigawatts of AI infrastructure this decade, with plans to scale to hundreds of gigawatts over time. The company has committed $600B to U.S. infrastructure by 2028 and locked in 20-year nuclear power agreements to keep the lights on. This comes as Meta trims about 10 percent of Reality Labs, refocusing hard on frontier AI.

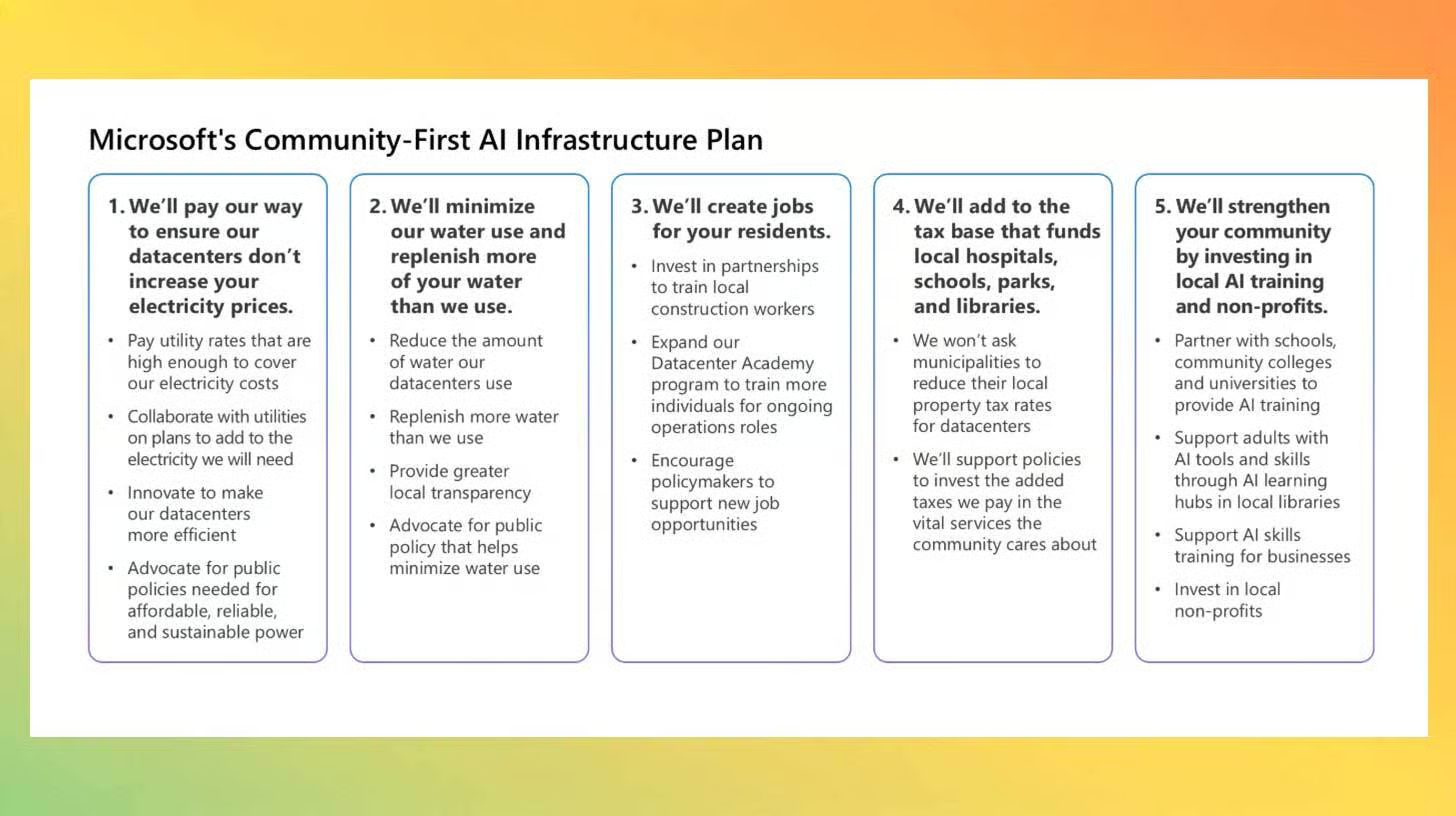

Meanwhile, Microsoft launched its Community-First AI Infrastructure plan, promising data centers that do not raise local power bills, replenish more water than they consume and pay full property taxes without incentives.

Why it matters

AI’s bottleneck is no longer talent or models. It is electricity, water, and political permission. Meta is playing offense by brute-force scaling. Microsoft is playing defense by trying to stay welcome in local communities that are increasingly skeptical of data centers.

The deets

- Meta Compute is co-led by infrastructure chief Santosh Janardhan and Daniel Gross

- Former national security official Dina Powell McCormick will handle government partnerships

- Microsoft pledged a 40 percent reduction in water-use intensity by 2030

- New Microsoft facilities will rely on closed-loop cooling, avoiding local drinking water

Key takeaway

The AI race is becoming an infrastructure arms race, and community backlash is now a strategic risk.

🧩 Jargon Buster - Gigawatt-scale compute: Data center capacity measured at the level of entire cities’ power consumption.

Source: The Rundown AI

🧠 Research & Models

Humanoid Robots Look Great On Video, Fuzzier In Reality

Matrix Robotics unveiled MATRIX-3, its third humanoid robot, promising improved human-like motion, touch and cognition. The announcement came with slick demos and pilot roadmaps. What it did not include was confirmation that any Matrix humanoid has shipped.

Separately, researchers at Keio University showed robots can learn adaptive touch using far less training data, cutting grasping errors by over 40 percent in-range and 74 percent out-of-range.

Why it matters

Humanoids are stuck between storytelling and supply chains. While Matrix continues to iterate conceptually, academic work is quietly solving the real blockers: adaptation, tactile intelligence, and deployment outside pristine lab environments.

The deets

- Matrix joins Figure in the “premium humanoid, long pilots” category

- New tactile systems infer intent rather than just replaying recorded motion

- Needing fewer training demonstrations means lower deployment costs in messy real-world settings

Key takeaway

The future of robots is less about cinematic launches and more about boring reliability in unpredictable environments.

🧩 Jargon Buster - Tactile adaptation: A robot’s ability to adjust grip and force dynamically when objects behave differently than expected.

Source: Robotics Herald

Your Phone Just Became a Robot Trainer

NoeMatrix has introduced RoboPocket, a lightweight kit that turns an everyday smartphone into a professional-grade robot data capture system. By tapping into a phone’s camera, LiDAR, and IMU, RoboPocket records synchronized spatial and motion data for training embodied AI. A built-in feedback layer scores data quality in real time, letting users know instantly whether a recording is usable or junk.

Instead of relying on custom rigs or lab-only setups, RoboPocket turns a pocket device into a mobile sensing platform capable of producing structured datasets on the fly.

Why it matters

Robot training data has long been expensive, slow, and painfully centralized. RoboPocket challenges that model by pushing data collection into the real world, using hardware that teams already own. If the system performs as advertised, it lowers the barrier to entry for robotics teams and shifts the bottleneck away from sensors and toward deployment speed.

The deets

- Uses smartphone camera, LiDAR, and IMU for synchronized capture

- Records shared timestamps and SLAM coordinates across multiple phones

- Replaces sensor rigs that often cost $10,000+

- Enables faster dataset creation in real-world environments, not simulations
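
For a feel of what "synchronized capture" involves, here is a minimal Python sketch that pairs each camera frame with the nearest IMU sample on a shared clock. It is an illustration only, not RoboPocket's code or API: the function names, the `max_gap` threshold, and the plain-list timestamp format are all assumptions.

```python
from bisect import bisect_left

def nearest_index(sorted_ts, t):
    """Return the index of the timestamp in sorted_ts closest to t.

    Assumes sorted_ts is non-empty and sorted in ascending order.
    """
    i = bisect_left(sorted_ts, t)
    if i == 0:
        return 0
    if i == len(sorted_ts):
        return len(sorted_ts) - 1
    # Pick whichever neighbour is closer; ties go to the earlier sample.
    return i - 1 if t - sorted_ts[i - 1] <= sorted_ts[i] - t else i

def align(frame_ts, imu_ts, max_gap):
    """Pair each camera frame with its nearest IMU sample.

    Returns (frame_index, imu_index) pairs. Frames whose nearest IMU
    sample is more than max_gap seconds away are dropped (hypothetical
    rule; a real system may interpolate instead).
    """
    pairs = []
    for fi, t in enumerate(frame_ts):
        ii = nearest_index(imu_ts, t)
        if abs(imu_ts[ii] - t) <= max_gap:
            pairs.append((fi, ii))
    return pairs
```

A frame with no IMU sample close enough is discarded rather than matched loosely, which is one simple way a capture tool could flag a recording as unusable.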

Key takeaway

If RoboPocket scales, robot learning moves out of controlled labs and into everyday spaces. The edge shifts to teams that can capture messier reality faster and retrain models continuously in the field.

🧩 Jargon Buster - SLAM (Simultaneous Localization and Mapping): A method that lets robots understand where they are while mapping their surroundings at the same time.

Source: Robotics Herald

🛠️ Tools & Products

Claude Cowork Brings Agentic AI To Your Desktop

Anthropic released Claude Cowork in research preview for Max subscribers on macOS. The desktop agent can read, edit and organize files within a selected folder, autonomously planning and executing multi-step workflows with no coding required.

Why it matters

This pushes AI agents directly into everyday knowledge work, not just developer tooling. It is a meaningful step toward AI coworkers that actually touch your files.

The deets

- Built on the Claude Agent SDK

- Supports browser automation and existing connectors

- Folder-level permissions aim to reduce risk from accidental deletions
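
As a rough illustration of what a folder-level permission check can look like, the Python sketch below tests whether a file path stays inside a user-selected folder before an agent touches it. This is not how Claude Cowork is implemented; `is_within` is a hypothetical helper, and the paths are made up.

```python
from pathlib import Path

def is_within(allowed_root, candidate):
    """Return True if candidate resolves to allowed_root or a path inside it.

    Both paths are resolved first, so "../" segments and symlinks cannot
    be used to step outside the allowed folder.
    """
    root = Path(allowed_root).resolve()
    target = Path(candidate).resolve()
    return target == root or root in target.parents

# Hypothetical usage: only the first path is inside the selected folder.
print(is_within("/tmp/work", "/tmp/work/notes/a.txt"))
print(is_within("/tmp/work", "/tmp/work/../secrets.txt"))
```

Comparing resolved path components instead of raw string prefixes avoids treating a sibling folder such as `/tmp/workspace` as part of `/tmp/work`.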

Key takeaway

Agentic AI is leaving the terminal and moving onto the desktop.

🧩 Jargon Buster - Agentic workflow: An AI system that plans, executes, and adapts tasks autonomously rather than responding one prompt at a time.

Source: AI Breakfast, The Rundown AI

⚡ Quick Hits

- Meta Compute launches as Meta trims Reality Labs headcount.

- Google Veo 3.1 upgrades video generation for creators.

- McKinsey now counts 25,000 AI agents among its workforce.

- Pentagon begins integrating Grok into military networks.

🧰 Tools Of The Day

Nume – The world’s first AI CFO built for startups and SMEs. Connects to Xero and QuickBooks in minutes to deliver real-time financial insight without the spreadsheet grind.

EmailVerify – Validates email lists with 99.9% accuracy, flagging disposables, spam traps, role accounts, and catch-all domains in real time.

Infinite Creator – Crawls your website, learns your brand voice, and generates months of social content complete with images, captions, and scheduling.

TeeDIY – Turns photos, prompts, or templates into custom tees and hoodies, with smart tools that handle backgrounds, layouts, and styling automatically.

DailyScope.ai – Tracks global news coverage to surface the most-covered countries, themes, and media patterns across international outlets.

ASOfuel – Analyzes your app store listing, extracts keywords from screenshots, mines competitor reviews, and delivers prioritized ASO actions with AI.

daily.dev Recruiter – Matches open roles to engineers based on what they read and code, then delivers warm, opt-in candidate intros.

Hyperfox – Automates order intake from email, EDI, web forms, and field sales, validating everything against business rules before pushing to ERP or TMS systems.

Today’s Sources: AI Breakfast, Robotics Herald, The Rundown AI