OpenAI Triple Play; Google Seizes Day; Creepy 'Snail Bots'

Today's AI forecast: 🌥️

OpenAI’s Images, Wet Lab Work, Voice Boost

OpenAI dropped a coordinated salvo that hits creation, science and infrastructure all at once.

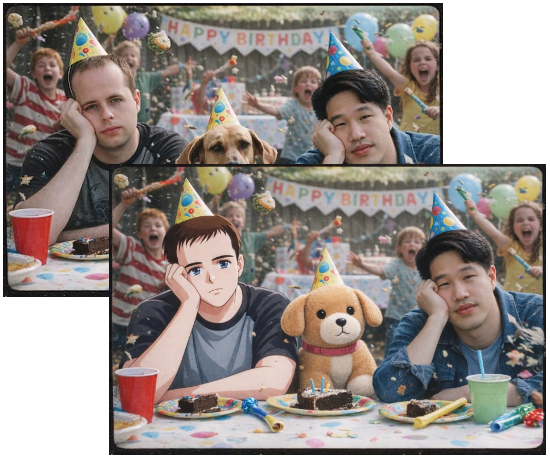

First up: GPT Image 1.5, now live in ChatGPT and via API. It delivers up to 4x faster image generation (finally), far stronger text rendering, and something users have begged for forever: consistent edits without regenerating the entire image. Faces stay the same. Lighting stays put. Typography mostly behaves. A new Images workspace adds presets, curated prompts, and style starters, shifting image creation from novelty to workflow.

But the bigger flex happened outside pixels. OpenAI partnered with Red Queen Bio, putting GPT-5 into an active wet lab. The model dynamically optimized molecular cloning protocols based on live experimental feedback, delivering a reported 79x efficiency gain. This was not just automation. The model recombined biological concepts in novel ways, marking a serious step toward AI as a co-discoverer, not just a calculator.

Rounding it out, OpenAI upgraded its voice stack, launching three new Realtime API models that cut transcription errors, reduced text-to-speech word error rates by 35%, and improved instruction-following by 22%, with expanded multilingual support. The company also quietly rolled back its automated model router, restoring predictable defaults after users complained about inconsistent results.
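For readers who want the arithmetic behind that 35% figure, here is a minimal Python sketch of how word error rate (WER) is commonly computed: the word-level edit distance between a reference transcript and what the system produced, divided by the reference length. The function names are hypothetical, and the 20% and 13% sample rates are made up for illustration. The source does not say whether the 35% is a relative or absolute reduction; this sketch assumes relative.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far (excluding the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1      # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


def relative_reduction(old_rate: float, new_rate: float) -> float:
    """Fraction by which new_rate improves on old_rate (assumed meaning of 'cut by 35%')."""
    return (old_rate - new_rate) / old_rate


if __name__ == "__main__":
    # One wrong word out of six reference words.
    print(word_error_rate("the cat sat on the mat", "the cat sat on a mat"))
    # Illustrative only: a drop from 20% WER to 13% WER.
    print(relative_reduction(0.20, 0.13))
```

Under the relative reading, a system that previously got 20 words wrong per 100 would now get about 13 wrong, which is a noticeable but not transformative change.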

Why it matters

This was platform stabilization plus frontier signaling. Image parity with Google buys time. Wet-lab success pushes AI deeper into science. Voice reliability moves agents closer to real work. Together, it reframes OpenAI as less a chaotic lab and more an operating system for intelligence.

The Deets

- GPT Image 1.5 tops Artificial Analysis and LM Arena leaderboards for image generation and editing

- Preserves composition, faces and lighting across edits

- New Images workspace with presets and curated styles

- GPT-5 achieved a 79x efficiency gain in molecular cloning with Red Queen Bio

- Voice models cut text-to-speech word error rate (WER) by 35% and boosted instruction-following by 22%

- Automated model router removed after user backlash

- George Osborne, former UK Chancellor, tapped to lead OpenAI’s global Stargate data center expansion

- Chief Communications Officer Hannah Wong steps down in January

Key takeaway

OpenAI is no longer just shipping features. It is reasserting control of the stack, from pixels to proteins to power plants.

🧩 Jargon Buster - Wet lab: A physical laboratory where biological or chemical experiments are performed, as opposed to simulations or purely computational work.

Source: AI Breakfast, The Rundown AI

⚔️ Power Plays

Gemini Pushes Toward Total System Control

Google is not chasing model headlines. It is absorbing workflows. The experimental CC assistant integrates directly into Gmail, Calendar and Drive, delivering a personalized “Your Day Ahead” summary and executing tasks immediately. Drafts, scheduling and follow-ups happen before you ask.

At the model layer, Gemini 2.5 Flash Native Audio tightens voice control loops, hitting 90% instruction compliance and 71.5% accuracy on complex function calls. Voice is no longer a novelty interface. It is becoming the most precise one.

Meanwhile, NotebookLM is now embedded directly into Gemini, allowing multiple notebooks to act as a RAG knowledge base. This feeds into an upgraded Gems Manager, complete with Super Gems and an auto-generating Workflow Builder.

Why it matters

Google is collapsing the gap between research, context and action. Instead of better answers, it is optimizing for less friction per decision.

The Deets

- CC assistant automates daily planning across Google Workspace

- Gemini 2.5 Flash Native Audio leads on voice precision

- NotebookLM becomes native RAG context inside Gemini

- Gems Manager introduces Super Gems and workflow automation

Key takeaway

OpenAI is winning moments. Google is winning habits.

🧩 Jargon Buster - RAG: Retrieval-Augmented Generation, where models pull from external documents or data before responding.
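To make the retrieve-then-respond idea concrete, here is a toy Python sketch. It is not how Gemini or NotebookLM implement retrieval: production systems typically rank documents with embeddings, while this example swaps in simple word overlap. The function names, the sample notes, and the prompt layout are all hypothetical.

```python
def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents sharing the most words with the query.

    Word overlap is a stand-in for the embedding similarity a real
    RAG system would likely use.
    """
    query_words = set(query.lower().split())
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 1) -> str:
    """Place the retrieved text ahead of the question, ready to send to a model."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    # Hypothetical notebook contents.
    notes = [
        "zoom meetings now generate tasks and drafts",
        "snail robots use vacuum suction to anchor",
    ]
    print(build_prompt("how do snail robots anchor", notes))
```

The key design point is the order of operations: the lookup happens first, and only the selected text travels to the model alongside the question.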

Source: AI Breakfast

🧠 Research & Models

Anthropic Tests Agentic Tasks Mode for Claude

Anthropic is testing Agentic Tasks Mode, reframing Claude as a system for executing work, not just chatting. Users can toggle between chat and agent mode, selecting structured paths: Research, Analyze, Write, Build or Do More.

Each path emphasizes different behaviors, from source-controlled research to validated analysis and structured document generation. A progress tracker and context manager expose task steps in real time.

Why it matters

This is not about smarter answers. It is about predictable labor. Claude is being shaped into a junior analyst you can actually manage.

The Deets

- Clear separation between chat and task execution

- Five predefined work modes

- Real-time progress tracking and context visibility

Key takeaway

Agents are getting interfaces, not just prompts.

🧩 Jargon Buster - Agentic workflow: A system where AI plans, executes, and monitors tasks autonomously within defined constraints.

Source: AI Breakfast

🐌 Snail-Bots Build, Climb and Dissolve

Researchers at the Robotics and AI Lab at CUHK Shenzhen have unveiled a swarm of palm-sized, dome-shaped “snail robots” that can link together, stack, detach, and reconfigure on the fly. Each unit moves using a magnetic track system, then switches to vacuum suction when it needs to anchor itself or support weight.

In a live demo, five of these robots assembled themselves into a temporary ramp, allowing one unit to climb higher than it could alone. Once the task was complete, the structure dissolved. Each robot disengaged and rolled away independently, leaving no permanent machine behind.

Why it matters

This is robotics moving away from rigid machines and toward adaptive matter. Instead of building one robot that does everything, CUHK’s approach lets many simple robots temporarily become whatever tool the environment demands. That flexibility is critical in search and rescue, disaster response, and remote construction, where conditions are unpredictable and infrastructure is missing.

The Deets

- Dome-shaped, palm-sized modular robots

- Magnetic movement combined with vacuum suction for load-bearing

- Capable of forming ramps, bridges, stairs, or supports

- Structures are temporary and fully reversible

- Designed for environments that are unstable or unstructured

Key takeaway

The future of robotics may not be bigger brains in bigger machines, but small bodies that know when to stick together and when to disappear.

🧩 Jargon Buster - Modular robotics: A robotics approach where many simple units combine and reconfigure to perform tasks that a single robot could not handle alone.

Source: Robotics Herald

🚀 Tools & Products

Zoom Wants the Workflow, Not the Meeting

Zoom launched AI Companion 3.0, expanding from summaries into end-to-end workflow orchestration. Meetings now generate tasks, drafts, documents, and tracked follow-ups across chat, docs, and third-party tools. It runs on a federated AI stack, combining Zoom models with OpenAI, Anthropic, and open-source options.

Why it matters

Zoom already owns distribution. Now it wants everything after the call.

The Deets

- Context pulled from meetings, files, and chat

- Tasks and documents auto-generated

- Federated model approach avoids vendor lock-in

Key takeaway

Summaries were table stakes. Ownership of outcomes is the prize.

🧩 Jargon Buster - Federated AI stack: A system that blends multiple models from different providers instead of relying on one.

Source: AI Secret

🧪 Research & Models

When More Agents Make Things Worse

A Google and MIT study tested multi-agent systems across 180 experiments. Results varied wildly. Financial analysis tasks improved by 81%, while step-by-step tasks like Minecraft degraded by up to 70%. When a single agent already performed well, adding more agents often hurt accuracy and burned tokens.

Why it matters

The agent hype has limits. Structure matters more than scale.

The Deets

- 180 experiments across OpenAI, Google, and Anthropic models

- Token budgets held constant

- Multi-agent systems often underperformed single agents

Key takeaway

More agents do not equal more intelligence.

🧩 Jargon Buster - Multi-agent system: A setup where multiple AI agents collaborate on a task instead of a single agent handling it alone.

Source: The Rundown AI

⚡ Quick Hits

- ChatGPT Images launches with GPT Image 1.5.

- NotebookLM integrates directly into Gemini workflows.

- FrontierScience benchmark crowns GPT-5.2 in research reasoning.

- FLUX.2 [max] debuts advanced image editing from Black Forest Labs.

Today’s Sources: AI Breakfast, The Rundown AI, AI Secret, Robotics Herald