World Modeling Arrives! Grok Best In Video; Apple Goes Quiet

Today's AI Outlook: ☀️

DeepMind Drops Project Genie - Amazing Worlds On Demand

Google DeepMind has officially opened access to Project Genie, a system that turns plain text or images into fully navigable 3D worlds in seconds. Type a prompt, get a playable environment rendered at 1280×720 and 24 frames per second.

You can walk, fly, or drive through it while the world generates in real time, remembers what it built, and stays visually consistent if you revisit areas. Sessions are capped at 60 seconds, not as a design choice but because each user gets a dedicated compute chip while exploring.
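To put the 60-second cap in perspective, here is some back-of-the-envelope arithmetic using only the figures quoted above (1280×720, 24 frames per second, 60-second sessions). It assumes a constant frame rate for the whole session, which is a simplification rather than a published spec.

```python
# Rough per-session arithmetic for Project Genie, using the quoted figures.
# Assumption: frame rate stays at a constant 24 fps for the full session.
WIDTH, HEIGHT = 1280, 720
FPS = 24
SESSION_SECONDS = 60

frames_per_session = FPS * SESSION_SECONDS            # frames generated per session
pixels_per_frame = WIDTH * HEIGHT                     # pixels in one 720p frame
pixels_per_session = frames_per_session * pixels_per_frame
frame_budget_ms = 1000 / FPS                          # time allowed to produce each frame

print(f"{frames_per_session:,} frames per session")
print(f"{pixels_per_session:,} pixels per session")
print(f"{frame_budget_ms:.1f} ms per frame")
```

In other words, the model has roughly 42 milliseconds to produce each new frame, about 1,440 times per session, which helps explain why each explorer gets a dedicated chip.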

This rollout is currently limited to U.S. subscribers on Google’s $250 per month AI Ultra plan, about five months after DeepMind previewed the Genie 3 model that powers it. The experience feels more like sketching reality than watching a video. You explore while the model is still thinking, correcting itself, and filling in gaps.

Why it matters

This is not about games, at least not primarily. DeepMind’s real goal is simulation at scale. Its bet is that training AI agents in realistic, interactive worlds beats training them on static datasets. Robots, planners, and decision-making agents need environments that behave like reality, not frozen screenshots of it. Genie pushes AI closer to learning by doing rather than memorizing.

It also quietly escalates the race to simulate reality itself. With players like World Labs, Runway, and others circling the same idea, the ability to spin up believable worlds on demand may become as foundational as text generation was in 2023.

The Deets

- Generates interactive 3D environments from text or images

- First- or third-person navigation

- Real-time rendering with world memory and consistency

- 60-second sessions due to heavy compute requirements

- Available via Google AI Ultra at $250 per month

Key takeaway

AI is shifting from predicting the world to building and inhabiting it.

🧩 Jargon Buster - World simulation: A technique where AI learns inside interactive environments that behave like the real world, rather than from static datasets or examples.

⚔️ Power Plays

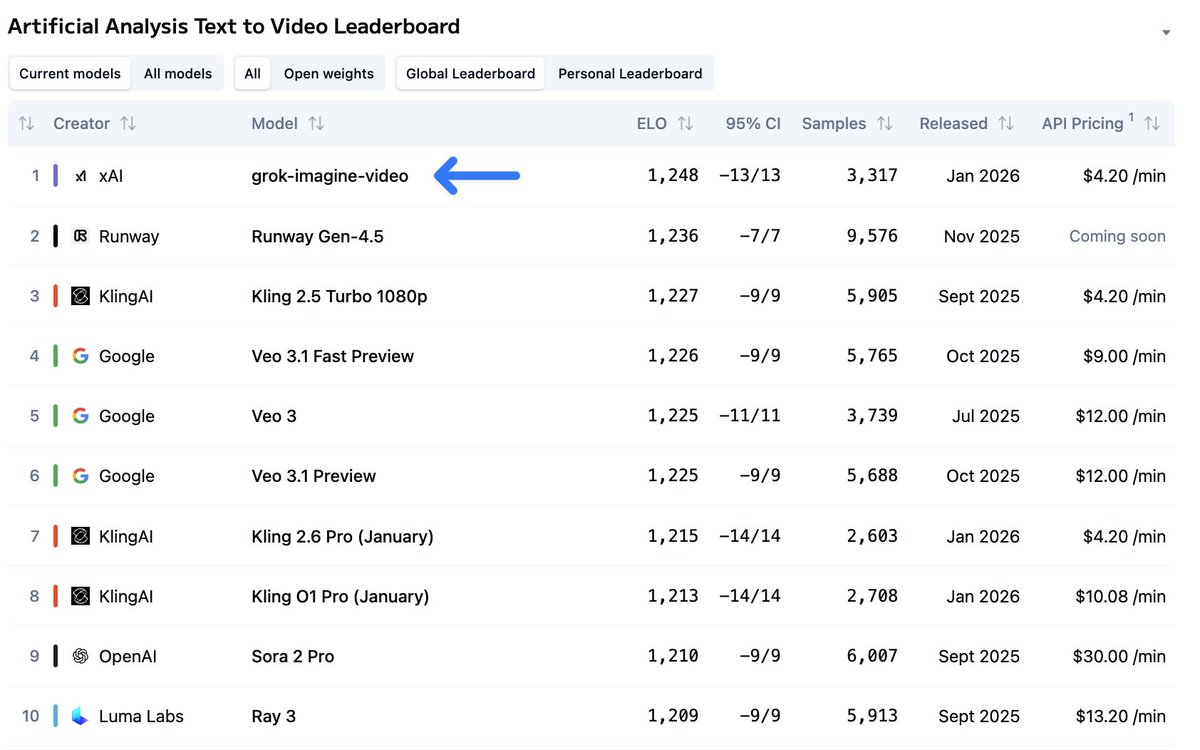

xAI’s Grok Imagine Shoots To The Top Of Video Leaderboards

xAI has launched the Grok Imagine API, and it immediately climbed to No. 1 on major text-to-video and image-to-video benchmarks. The system generates up to 15-second videos with built-in audio, dialogue, object interaction, and scene continuity. Creators and developers can edit scenes on the fly, swap objects, restyle environments, and animate characters with custom performances.

Pricing is aggressively low. Grok Imagine costs $4.20 per minute with audio included, undercutting Google’s Veo and OpenAI’s Sora by a wide margin. That price-performance combo is why it is spreading fast.
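Since clips top out at 15 seconds while the price is quoted per minute, a quick conversion shows what a single maximum-length clip costs. This sketch assumes billing is strictly prorated by output seconds with no per-request minimum or rounding; the actual billing granularity is not stated here, and `clip_cost` is a hypothetical helper.

```python
# Per-clip cost at the quoted $4.20/minute rate (audio included).
# Assumption: billing is prorated per second with no minimum charge or rounding.
RATE_PER_MINUTE = 4.20
MAX_CLIP_SECONDS = 15

def clip_cost(seconds: float, rate_per_minute: float = RATE_PER_MINUTE) -> float:
    """Hypothetical helper: dollar cost of a clip of the given length."""
    return rate_per_minute * seconds / 60

print(f"${clip_cost(MAX_CLIP_SECONDS):.2f} per 15-second clip")
```

Under that assumption, a full 15-second clip with audio comes to about $1.05, which is the kind of number that makes rapid trial and error affordable.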

Why it matters

Video is the most expensive and compute-heavy frontier in generative AI. Winning here is less about marginal quality gains and more about iteration speed and cost. If creators can afford to experiment freely, platforms win mindshare. xAI is betting that cheaper, faster video generation will matter more than cinematic perfection.

It also tightens the ecosystem loop. Grok feeds into X, Tesla, and potentially much larger infrastructure ambitions that tie compute, distribution, and data together.

The Deets

- Text-to-video, image-to-video, and video editing via API

- Up to 15 seconds per clip with native audio

- $4.20 per minute pricing

- Tops Artificial Analysis leaderboards

- Faster and cheaper than Sora and Veo

Key takeaway

Cheap, fast video generation is becoming the wedge that decides platform dominance.

🧩 Jargon Buster - Leaderboards: Independent benchmarks that rank AI models based on quality, speed, and consistency across standardized tests.

🛠️ Tools & Products

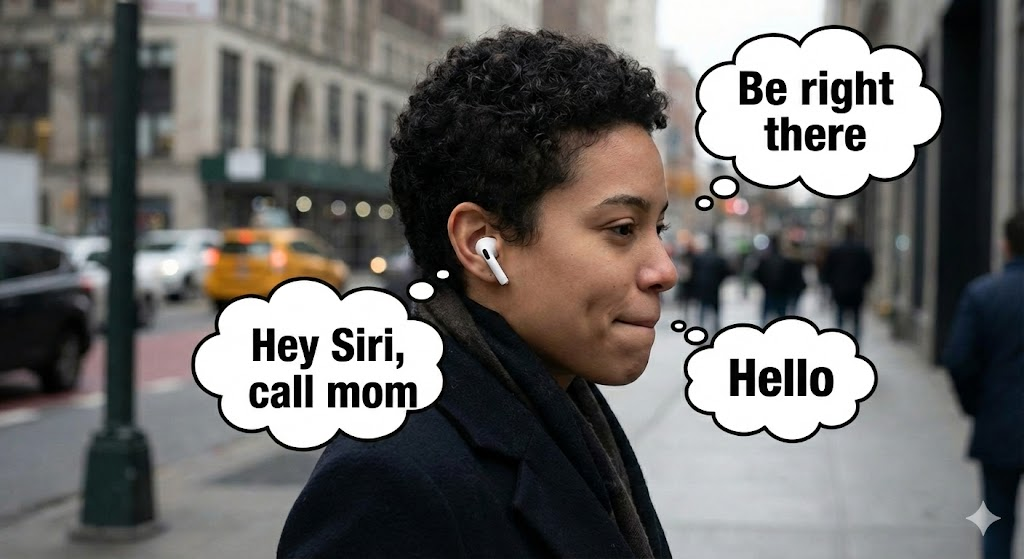

Apple’s Quiet $2B Bet On Silent Speech

Apple has acquired Q.ai for roughly $2B, its largest deal since Beats. The startup specializes in reading facial micro-movements to interpret silent speech, meaning words you mouth silently or barely whisper. Patents suggest future integration with AirPods and smart glasses, and the startup’s founder previously helped build the technology behind Face ID.

Why it matters

Silent speech turns voice into a private interface. No microphones. No noise. No social friction. It could redefine accessibility, wearable computing, and how humans interact with AI assistants in public spaces.

The Deets

- Interprets mouthed or whispered words via facial movement

- Potential AirPods and glasses integration

- Heavy accessibility and AR implications

Key takeaway

The next interface war may be fought on your face, not your screen.

🧩 Jargon Buster - Silent speech: A method of interpreting intended speech without audible sound, using muscle and facial movement signals.

🧠 Research & Models

Humanoids Meet Reality On The Factory Floor

Galbot AI’s S1 humanoid robot, designed almost exclusively for heavy lifting, is already deployed in factories. It carries a continuous 50-kilogram dual-arm payload, navigates autonomously, swaps its own batteries, and runs nearly nonstop.

Partners including Bosch and Toyota are testing it where reliability beats flexibility.

Meanwhile, Gartner forecasts that by 2028 fewer than 20 companies will deploy humanoids at scale, citing cost, uptime, and integration challenges.

Why it matters

Factories do not reward human-like form. They reward throughput. Robots that quietly replace conveyors and manual transfer steps may scale faster than flashy general-purpose humanoids.

The Deets

- 50-kilogram continuous payload

- Designed for logistics, not dexterity

- Already live on production lines

Key takeaway

Humanoids that win will do so by becoming invisible infrastructure.

🧩 Jargon Buster - Uptime: The percentage of time a system is operational and productive without failure.

⚡ Quick Hits

- Google Maps now lets walkers and cyclists ask Gemini questions hands-free mid-navigation.

- Gemini 3 Flash adds Agentic Vision, letting AI zoom, measure, and analyze images step by step.

- Chrome’s Auto Browse can now plan trips and manage subscriptions with user approval.

🧰 Tools Of The Day

- Agentic Commerce Tools (Meta, teased) – Mark Zuckerberg previewed agent-driven shopping tools that negotiate prices, manage subscriptions, and execute purchases autonomously. Think AI personal shoppers that actually transact, not just recommend.

- Anthropic Dev Productivity Metrics – New tooling from Anthropic helps teams quantify how much AI is actually improving developer output, measuring task completion, code quality, and time saved rather than vibes.

- ARC-AGI-3 Open Toolkit – The ARC Prize released an open-source evaluation toolkit to stress-test AI reasoning on general intelligence tasks. A new benchmark playground for researchers and builders who want brutal honesty.

- InVideo + Anthropic Motion Graphics – A new AI-powered motion graphics workflow that blends structured prompts with cinematic templates, aiming squarely at marketing teams that want fast, brand-safe video.

- Suno “Sample” – Upload any sound, hum, or noise and Suno turns it into a musical element you can build tracks around. Sampling without the crate digging.

- Cursor Fast Indexing – Cursor cut codebase indexing from four hours to 21 seconds, making large-repo AI coding assistants far more usable in real production environments.

- OpenMind App Store For Robots – A skills marketplace where robots can download new capabilities like apps, dramatically lowering the barrier to reprogramming machines for new tasks.

- LingBot-VLA – A depth-aware vision-language-action model that lets robots manipulate real-world objects with minimal training, even across different robot types.

- LM Studio 0.4.0 – Adds server deployment, REST APIs, and parallel requests, pushing local LLM workflows closer to production-grade tooling.

- Decart Lucy 2.0 – Live video reskinning in real time, letting creators transform appearance, environment, and style mid-stream.

- NVIDIA Nemotron 3 Nano – A 30B-parameter model that runs 4× faster and 1.7× leaner, targeting edge and enterprise deployments where efficiency actually matters.

- MiniMax Platform Expansion – Now available across web, Mac, Windows, iOS, and Android, making its multimodal models easier to test and deploy everywhere.

- AI Motion Graphics For Music And Video (PixVerse, VUBO) – Template-driven video tools continue to collapse production time from days to minutes for creators who care more about speed than perfection.

Today’s Sources: AI Breakfast, The Rundown AI, There’s An AI For That, Robotics Herald